Deep learning applications usually involve complex optimisation problems that are often difficult to solve analytically.

copyright by analyticsindiamag.com

Often the objective function itself is not available in analytic closed form, which means it permits only function evaluations, not gradient evaluations. This is where Zeroth-Order optimisation comes in.

Optimisation problems of this kind, involving black-box models whose gradients have no explicit expression or are infeasible to obtain, fall into the category of Zeroth-Order (ZO) optimisation.

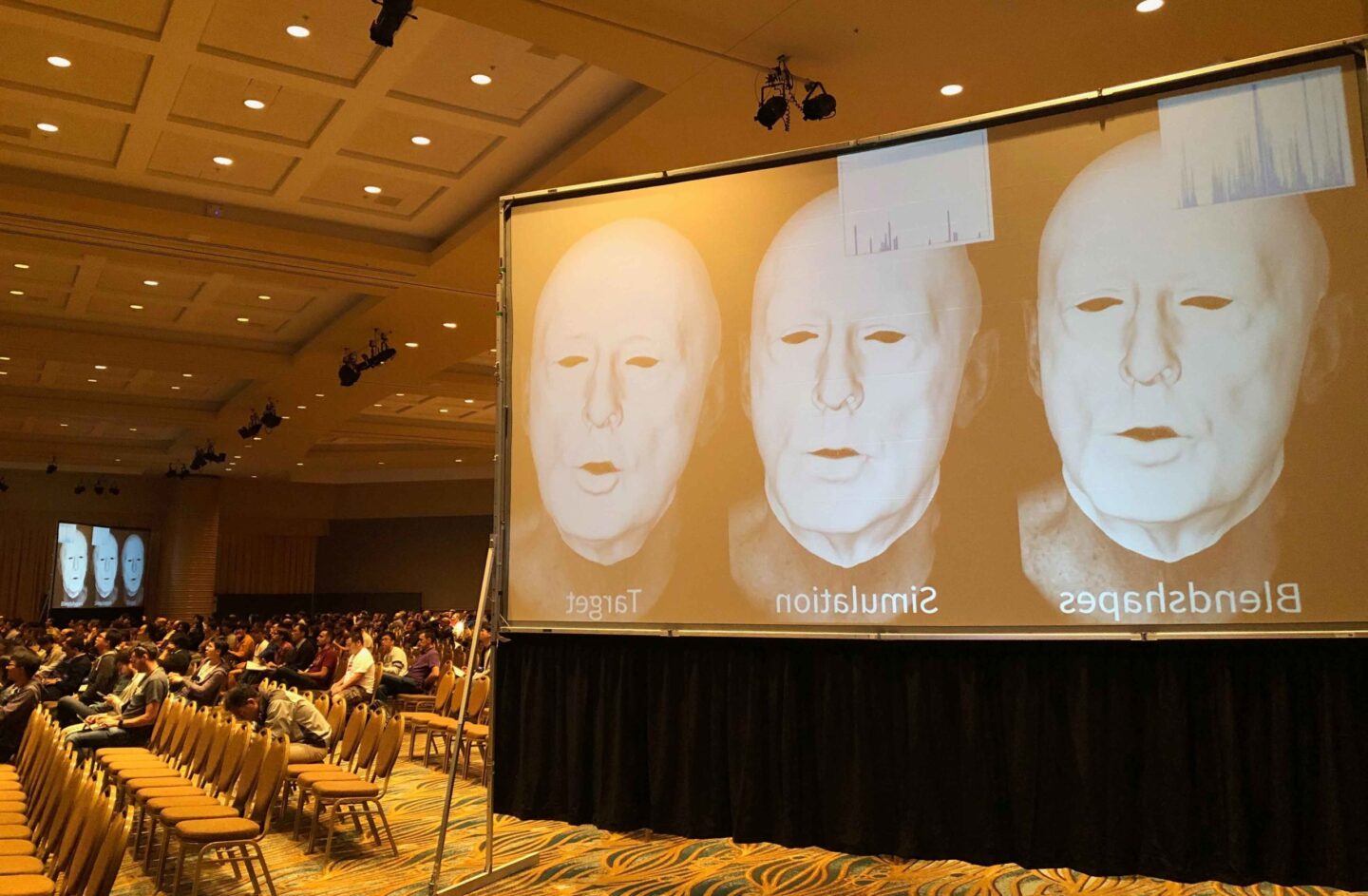

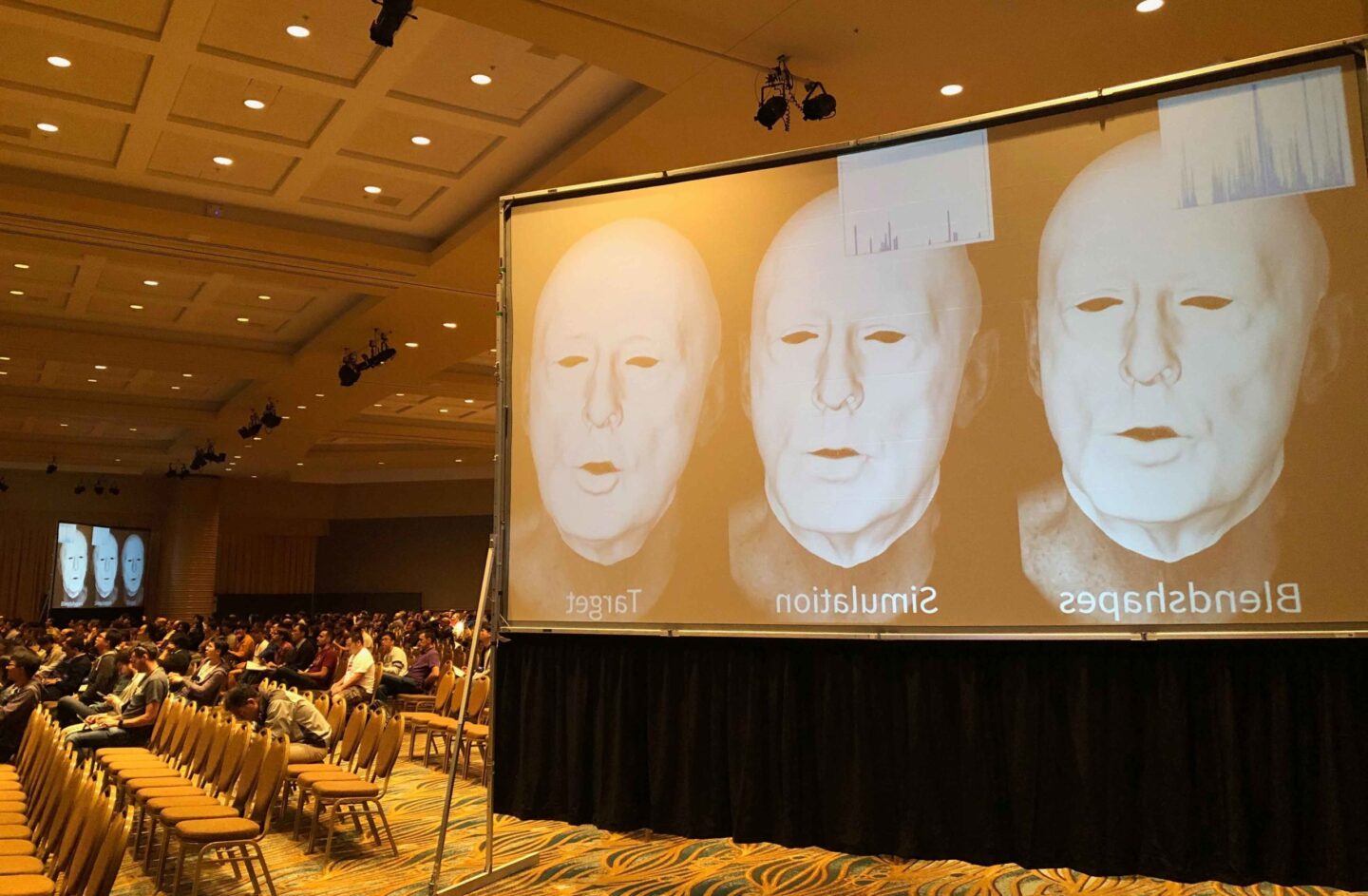

Researchers from IBM Research and the MIT-IBM Watson AI Lab discussed Zeroth-Order optimisation at the ongoing Computer Vision and Pattern Recognition (CVPR) 2020 conference. In this article, we look at what Zeroth-Order optimisation is and how it can be applied in complex deep learning applications.

Behind ZO Optimisation

Zeroth-Order (ZO) optimisation is a subset of gradient-free optimisation that arises in a variety of signal processing and machine learning applications. ZO optimisation methods are the gradient-free counterparts of first-order (FO) optimisation techniques: they approximate full or stochastic gradients with gradient estimates built from function values alone.
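To make "gradient estimates built from function values" concrete, here is a minimal Python sketch of two common estimators. It assumes only that the objective `f` can be queried at any point `x` (a list of floats). `zo_grad_coordinate` perturbs one coordinate at a time and costs d + 1 queries; `zo_grad_random` averages q two-point differences along random unit directions, g = (d/(qμ)) Σ [f(x + μu_i) − f(x)] u_i, and costs q + 1 queries. The function names, the smoothing radius `mu` and the number of directions `q` are illustrative assumptions, not details from the article.

```python
import math
import random


def random_unit_vector(d, rng):
    """Draw a direction uniformly at random from the unit sphere in R^d."""
    v = [rng.gauss(0.0, 1.0) for _ in range(d)]
    norm = math.sqrt(sum(c * c for c in v))
    return [c / norm for c in v]


def zo_grad_random(f, x, mu=1e-4, q=20, rng=None):
    """Random-direction ZO estimate of grad f(x) using q + 1 queries of f.

    g = (d / (q * mu)) * sum_i [f(x + mu * u_i) - f(x)] * u_i
    """
    rng = rng if rng is not None else random.Random(0)
    d = len(x)
    fx = f(x)  # baseline value, reused for every direction
    g = [0.0] * d
    for _ in range(q):
        u = random_unit_vector(d, rng)
        diff = f([xi + mu * ui for xi, ui in zip(x, u)]) - fx
        for j in range(d):
            g[j] += d * diff * u[j] / (q * mu)
    return g


def zo_grad_coordinate(f, x, mu=1e-4):
    """Coordinate-wise forward-difference estimate using d + 1 queries of f."""
    fx = f(x)
    g = []
    for j in range(len(x)):
        x_pert = list(x)
        x_pert[j] += mu  # nudge one coordinate at a time
        g.append((f(x_pert) - fx) / mu)
    return g


if __name__ == "__main__":
    def sphere(x):
        # Treated as a black box; its true gradient is 2x.
        return sum(c * c for c in x)

    x = [1.0, 2.0, 3.0]
    print(zo_grad_coordinate(sphere, x))      # close to [2.0, 4.0, 6.0]
    print(zo_grad_random(sphere, x, q=2000))  # noisier estimate of the same vector
```

The coordinate-wise estimate is accurate, but its query cost grows with the dimension d. The random-direction estimate has variance, yet its query budget q can be chosen independently of d, a trade-off that matters for high-dimensional problems.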

Derivative-Free methods for black-box optimisation have been studied by the optimisation community for many years. However, conventional Derivative-Free optimisation methods have two main shortcomings: they are difficult to scale to large problems, and they lack convergence-rate analysis.

ZO optimisation has the following three main advantages over conventional Derivative-Free optimisation methods:

Applications Of ZO Optimisation

ZO optimisation has drawn increasing attention because of its success on emerging problems in signal processing, machine learning and deep learning. It serves as a powerful and practical tool for evaluating the adversarial robustness of deep learning systems.

According to Pin-Yu Chen, a researcher at IBM Research, ZO optimisation achieves gradient-free optimisation by approximating the full gradient with efficient gradient estimators.
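Replacing the true gradient with such an estimate turns a first-order method into its ZO counterpart. The sketch below assumes plain gradient descent and a random-direction gradient estimator (declared inline so the block runs on its own), and minimises a quadratic that is only ever evaluated, never differentiated. `zo_gradient_descent`, its step size, its number of directions per step and its iteration count are hypothetical choices for illustration, not the researchers' settings.

```python
import math
import random


def zo_gradient(f, x, mu, q, rng):
    """Random-direction ZO gradient estimate using q + 1 queries of f."""
    d = len(x)
    fx = f(x)
    g = [0.0] * d
    for _ in range(q):
        u = [rng.gauss(0.0, 1.0) for _ in range(d)]
        norm = math.sqrt(sum(c * c for c in u))
        u = [c / norm for c in u]  # uniform direction on the unit sphere
        diff = f([xi + mu * ui for xi, ui in zip(x, u)]) - fx
        for j in range(d):
            g[j] += d * diff * u[j] / (q * mu)
    return g


def zo_gradient_descent(f, x0, lr=0.05, iters=300, mu=1e-4, q=10, seed=0):
    """Gradient descent with the true gradient replaced by a ZO estimate."""
    rng = random.Random(seed)
    x = list(x0)
    queries = 0
    for _ in range(iters):
        g = zo_gradient(f, x, mu, q, rng)
        queries += q + 1  # q perturbed points plus one baseline value
        x = [xi - lr * gi for xi, gi in zip(x, g)]
    return x, queries


if __name__ == "__main__":
    def loss(x):
        # Treated as a black box: only its values are ever used.
        return sum((xi - 1.0) ** 2 for xi in x)

    x_final, n_queries = zo_gradient_descent(loss, [0.0] * 5)
    print(loss(x_final), n_queries)  # loss near 0 after 300 * 11 = 3300 queries
```

The only change from first-order gradient descent is the line that computes `g`; the price is paid in function queries, which is why query efficiency is a central concern in ZO methods.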

Some important recent applications include generating prediction-evasive, black-box adversarial attacks on deep neural networks, generating model-agnostic explanations of machine learning systems, and designing gradient- or curvature-regularised robust ML systems in a computationally efficient manner. Further use cases include automated ML and meta-learning, online network management with limited computational capacity, parameter inference for black-box or complex systems, and bandit optimisation, in which a player receives only partial feedback in the form of loss function values revealed by her adversary.

ZO Optimisation For Adversarial Robustness In Deep Learning

Discussing the use of ZO optimisation to generate prediction-evasive adversarial examples that fool DL models, the researchers noted that most studies of the adversarial vulnerability of deep learning had been restricted to the white-box setting, in which the adversary has complete access to and knowledge of the target system, such as a deep neural network.

In most cases, however, the internal states, configuration and operating mechanism of a deep learning system, for instance the Google Cloud Vision API, are not revealed to practitioners. This gives rise to black-box adversarial attacks, in which the adversary can interact with the system only by submitting inputs and receiving the corresponding predicted outputs. […]
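The black-box setting can be illustrated with a toy example. The sketch below assumes a hypothetical linear classifier, hidden behind `make_black_box`, that returns only the probability of class 1, and an attacker who may change each input feature by at most `eps`. `zo_attack` runs projected ZO gradient descent on that probability using query results alone and stops as soon as the prediction flips. All names, weights and parameters are invented for illustration; this is a simplified query-based attack, not the method presented by the IBM researchers.

```python
import math
import random


def make_black_box():
    """Hypothetical toy classifier; the attacker may only call predict_proba."""
    w = [1.0, -2.0, 0.5, 1.5]  # hidden weights, never read by the attack
    b = 0.1
    calls = {"n": 0}

    def predict_proba(x):
        calls["n"] += 1
        score = sum(wi * xi for wi, xi in zip(w, x)) + b
        return 1.0 / (1.0 + math.exp(-score))  # probability of class 1

    return predict_proba, calls


def zo_attack(query, x0, eps=0.3, lr=0.2, mu=1e-4, q=20, max_iters=200, seed=0):
    """Search for delta with max|delta_j| <= eps that drops P(class 1) below 0.5.

    Projected ZO descent on loss(delta) = query(x0 + delta), using queries only.
    """
    rng = random.Random(seed)
    d = len(x0)
    delta = [0.0] * d

    def loss(dl):
        return query([xi + di for xi, di in zip(x0, dl)])

    for _ in range(max_iters):
        base = loss(delta)
        if base < 0.5:
            return delta, True  # prediction flipped to class 0
        g = [0.0] * d
        for _ in range(q):
            u = [rng.gauss(0.0, 1.0) for _ in range(d)]
            norm = math.sqrt(sum(c * c for c in u))
            u = [c / norm for c in u]
            diff = loss([di + mu * ui for di, ui in zip(delta, u)]) - base
            for j in range(d):
                g[j] += d * diff * u[j] / (q * mu)
        # Gradient step, then project back onto the L-infinity ball of radius eps.
        delta = [min(eps, max(-eps, di - lr * gj)) for di, gj in zip(delta, g)]
    return delta, loss(delta) < 0.5


if __name__ == "__main__":
    query, calls = make_black_box()
    x0 = [0.5, 0.2, 0.4, 0.3]
    print(query(x0))  # about 0.70, so x0 is classified as class 1
    delta, success = zo_attack(query, x0)
    print(success, delta, calls["n"])
```

The attacker never reads `w` or `b`; every step uses q + 1 calls to `predict_proba`, so the call counter shows the query cost that a real black-box attack would incur against a remote API.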
