copyright by www.forbes.com

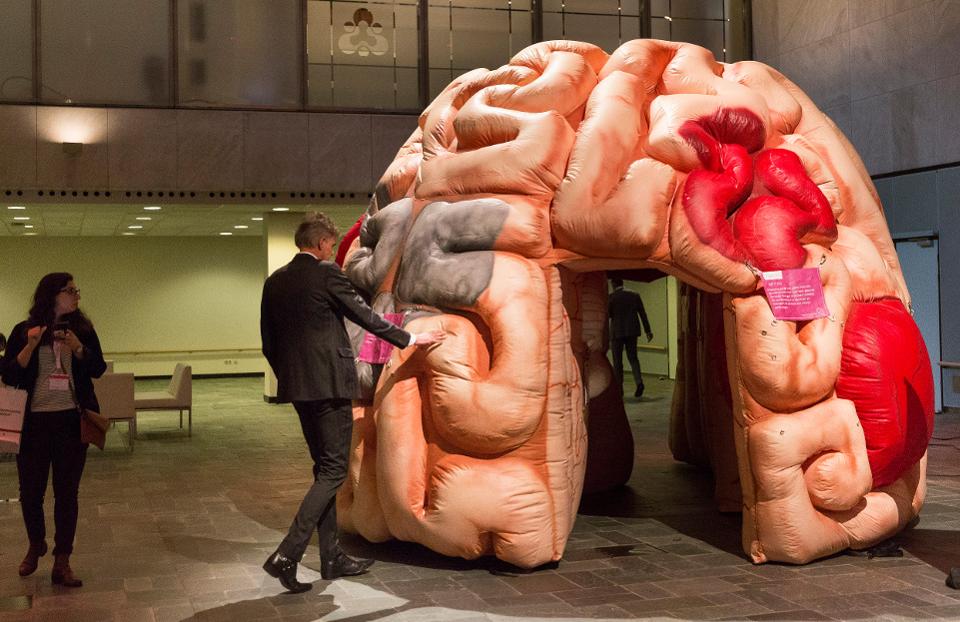

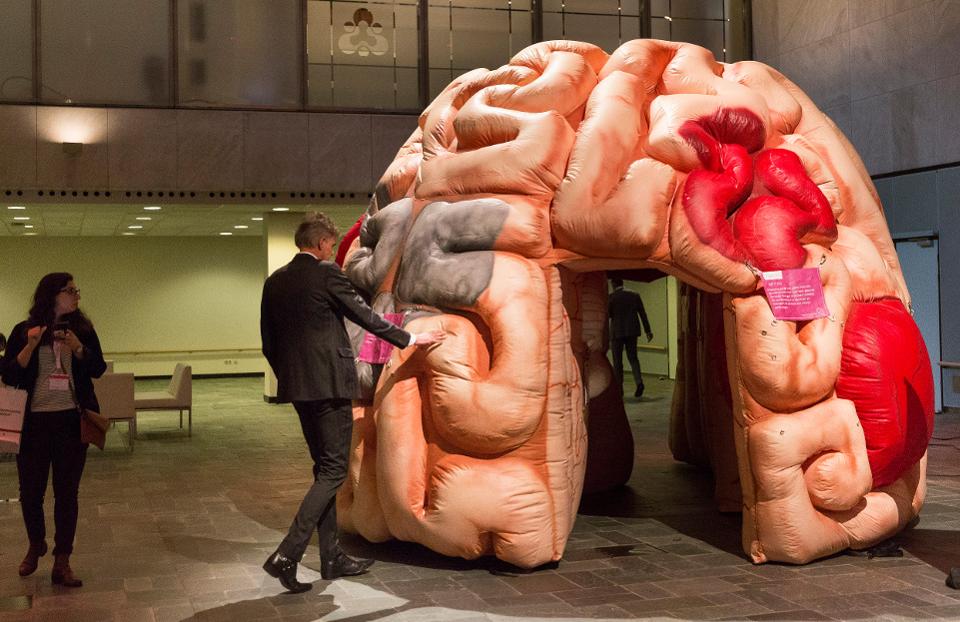

The Covid-19 outbreak has overwhelmed health systems around the world. At one point, bed spaces and ventilators for patients, as well as protective gear for health workers, were not enough to go around. This meant that health systems, especially in developed countries, had to employ certain technologies to allocate resources efficiently. AI is one of those, and its importance in the fight against coronavirus continues to grow.

An app developed by researchers at New York University, which uses AI and big data to predict the severity of Covid-19 cases, is a good example of how the technology helps in resource allocation, at least in theory. The researchers used patient data from 160 hospitals in Wuhan, China to identify four biomarkers that were significantly higher in patients who died of the virus than in those who recovered. Based on the data fed into the AI model, the app assigns each patient a severity score, which a clinician can use to make informed care and resource allocation decisions.

Despite the positive impact that AI could bring to the coronavirus battlefield, the flaws in the underlying data being employed could deepen the inequities that already exist across gender and racial groups, wrote Genevieve Smith and Ishita Rustagi, both of the Center for Equity, Gender and Leadership at the UC Berkeley Haas School of Business, in an article published in the Stanford Social Innovation Review.

Interestingly, these data reliability concerns aren’t unique to the coronavirus era. In fact, AI, along with subsets such as machine learning and deep learning, is plagued by data bias and data quality problems.

The main discussion here is about how blockchain could help in tackling these data reliability concerns. But it’d be valuable to first understand the source of data bias.

How Data Bias Crawls Into Artificial Intelligence

Data bias can creep into an AI system at different stages, including problem framing, data collection and data preparation. The business goal a company is looking to reach will be fundamental to the framing of the problem. The goal, in itself, could be discriminatory or unfair.

Also, during the data collection stage, bias could slip in by collecting data that’s either unrepresentative of reality or reflective of existing prejudices. If, for instance, you feed a deep learning model more photos of one skin color than another, the resulting facial recognition system will fare better at identifying the skin color predominant in the training data.
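One simple way to spot an unrepresentative training set before a model is trained is to measure how large a share of the records each group contributes. The Python sketch below is only an illustration: the function names, the group labels and the 20% threshold are assumptions chosen for the example, not taken from any system mentioned in this article.

```python
from collections import Counter


def group_shares(labels):
    """Return each group's share of the dataset as a fraction of all records."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {group: count / total for group, count in counts.items()}


def underrepresented(labels, min_share=0.2):
    """List the groups whose share falls below min_share (an assumed threshold)."""
    shares = group_shares(labels)
    return sorted(group for group, share in shares.items() if share < min_share)


# Hypothetical labels attached to a set of training photos.
sample = ["A"] * 80 + ["B"] * 15 + ["C"] * 5

print(group_shares(sample))
print(underrepresented(sample))
```

A check like this only shows how many records each group has. It says nothing about whether the labels themselves carry existing prejudices, which is the second source of bias described above.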

With regard to collecting data that reflects existing prejudices, Amazon reportedly ditched an AI-based recruitment system after finding that it was biased against women. Bringing it back to healthcare, an algorithm used by many U.S. hospitals to forecast risk and subsequently allocate resources favored white patients over Black patients with the same level of illness, a group of researchers found in 2019.

Two Blockchain-Based Approaches for Improving Data Quality

The deeper you dig, the more of these biases you’ll find. There isn’t a single solution to these issues given their complexity. One thing that experts agree on, though, is the need for data diversity. Greater data transparency and robust collaboration could help achieve it. This is where blockchain technology comes in. By design, the technology works only through collaboration between several parties to maintain the network. This could bring transparency, decentralization and verifiability to machine learning models and the data they’re fed.

Incentivizing The Contribution of Quality Training Data

Last year, Microsoft introduced an initiative called Decentralized & Collaborative AI on Blockchain. The goal is to leverage public blockchains, Ethereum in this case, for collaborative and continuous model training and maintenance. A key component of this is developing a mechanism that incentivizes participants to contribute “good data,” according to Justin Harris, a senior software developer at Microsoft who works on this initiative.

In this system, participants must commit a certain amount up front to the smart contract to contribute their data for the training. If the system determines that the data is good, i.e., that it meets certain requirements, they get a refund. Contributing bad data would, therefore, result in the loss of the initial commitment, with the funds distributed to contributors of good data. […]
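The deposit-and-refund logic described above can be sketched as a small off-chain simulation in Python. This is not Microsoft’s implementation: the `settle` function, the quality scores, the 0.5 threshold and the equal split of forfeited deposits are all assumptions made for illustration, and the article does not say how the real system judges data or divides the funds.

```python
def settle(submissions, deposit, is_good):
    """Refund good contributors and split forfeited deposits among them.

    submissions: list of (contributor, sample) pairs, one deposit per pair.
    is_good: hypothetical check standing in for the system's data requirements.
    Returns (payouts, leftover), where leftover is what stays undistributed.
    """
    good = [who for who, sample in submissions if is_good(sample)]
    pool = (len(submissions) - len(good)) * deposit  # deposits lost on bad data
    payouts = {who: 0 for who, _ in submissions}
    if not good:
        return payouts, pool  # nobody qualifies, so the pool is not paid out
    share, leftover = divmod(pool, len(good))  # assumed equal split
    for who in good:
        payouts[who] += deposit + share  # refund plus a share of the pool
    return payouts, leftover


# Hypothetical submissions: each sample is reduced to a quality score.
submissions = [("alice", 0.9), ("bob", 0.2), ("carol", 0.8)]
payouts, leftover = settle(submissions, deposit=10, is_good=lambda score: score >= 0.5)
print(payouts, leftover)
```

In this toy run, the one contributor whose data fails the check loses the deposit, and the two whose data passes get theirs back plus an equal share of the forfeited amount.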
