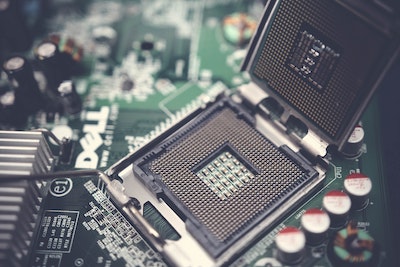

The microcomputer revolution of the 1970s triggered a Wild West-like expansion of personal computers in the 1980s.

copyright by www.seas.harvard.edu

Over the course of the decade, dozens of personal computing devices, from Atari to Xerox Alto, flooded into the market. CPUs and microprocessors advanced rapidly, with new generations coming out on a monthly basis.

Amidst all that growth, there was no standard method to compare one computer’s performance against another. Without one, consumers could not tell which system better suited their needs, and computer designers had no standard way to test their systems.

That changed in 1988, when the Standard Performance Evaluation Corporation (SPEC) was established to produce, maintain and endorse a standardized set of performance benchmarks for computers. Think of benchmarks like standardized tests for computers. Like the SATs or TOEFL, benchmarks are meant to provide a method of comparison between similar participants by asking them to perform the same tasks.

Since SPEC, dozens of benchmarking organizations have sprung up to compare the performance of systems across different chip and program architectures.

Today, there is a new Wild West in machine learning. Currently, there are at least 40 different hardware companies poised to break ground in new AI processor architectures.

“Some of these companies will rise but many will fall,” said Vijay Janapa Reddi, Associate Professor of Electrical Engineering at the Harvard John A. Paulson School of Engineering and Applied Sciences (SEAS). “The challenge is how can we tell if one piece of hardware is better than another? That’s where benchmark standards become important.”

Janapa Reddi is one of the leaders of MLPerf, a machine learning benchmarking suite. MLPerf began as a collaboration between researchers at Baidu, Berkeley, Google, Harvard, and Stanford and has grown to include many companies, a host of universities, and hundreds of individual participants worldwide. Other Harvard contributors include David Brooks, the Haley Family Professor of Computer Science at SEAS, and Gu-Yeon Wei, the Robert and Suzanne Case Professor of Electrical Engineering and Computer Science at SEAS.

The goal of MLPerf is to create a benchmark for measuring the performance of machine learning software frameworks, hardware accelerators, and cloud and edge computing platforms.

We spoke to Janapa Reddi about MLPerf and the future of benchmarking for machine learning.

SEAS: First, how does benchmarking for machine learning work?

Janapa Reddi: In its simplest form, a benchmark standard is a strict definition of a machine learning task, let’s say image classification. Using a model that implements that task, such as ResNet50, and a dataset, such as COCO or ImageNet, the model is evaluated with a target accuracy or quality metric that it must achieve when it is executed with the dataset.[…]
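To make the idea in that answer concrete, here is a minimal Python sketch of a benchmark defined as a task plus a quality target. It is an illustration only, not MLPerf's actual harness: the names `BenchmarkSpec`, `BenchmarkResult`, `run_benchmark`, `toy_model`, and `toy_dataset` are hypothetical, the threshold is made up, and a trivial parity function stands in for a real model such as ResNet50 and a real dataset such as ImageNet.

```python
import time
from dataclasses import dataclass
from typing import Callable, Sequence, Tuple


@dataclass(frozen=True)
class BenchmarkSpec:
    """Hypothetical benchmark definition: a task and the quality bar a run must clear."""
    task: str
    target_accuracy: float  # illustrative threshold, not an official MLPerf value


@dataclass(frozen=True)
class BenchmarkResult:
    accuracy: float
    elapsed_seconds: float
    valid: bool  # True only if the run met the quality target


def run_benchmark(
    spec: BenchmarkSpec,
    model: Callable[[int], int],
    dataset: Sequence[Tuple[int, int]],
) -> BenchmarkResult:
    """Run the model over every (input, label) pair, timing the run and scoring accuracy."""
    if not dataset:
        raise ValueError("dataset must not be empty")
    start = time.perf_counter()
    correct = sum(1 for sample, label in dataset if model(sample) == label)
    elapsed = time.perf_counter() - start
    accuracy = correct / len(dataset)
    # A fast run only counts if it also reaches the required quality.
    return BenchmarkResult(accuracy, elapsed, accuracy >= spec.target_accuracy)


def toy_model(sample: int) -> int:
    """Stand-in 'classifier' that predicts the parity of its input."""
    return sample % 2


# Ten labelled samples; the last label is deliberately wrong, so accuracy is 9 out of 10.
toy_dataset = [(i, i % 2) for i in range(9)] + [(9, 0)]

spec = BenchmarkSpec(task="image classification", target_accuracy=0.75)
result = run_benchmark(spec, toy_model, toy_dataset)
print(f"{spec.task}: accuracy={result.accuracy:.2f}, valid={result.valid}")
```

The design point is that speed and quality are reported together: in this sketch a result is marked valid only when the accuracy target is reached, so two systems are compared on how quickly they do the same work to the same standard.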


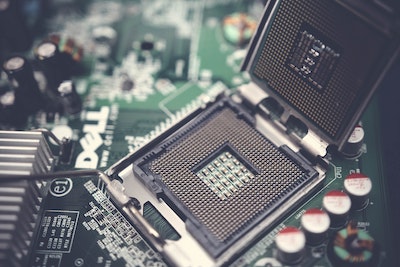

The microcomputer revolution of the 1970s triggered a Wild West-like expansion of personal computers in the 1980s.

copyright by www.seas.harvard.edu

Amidst all that growth, there was no standard method to compare one computer’s performance against another. Without this, not only would consumers not know which system was better for their needs but computer designers didn’t have a standard method to test their systems.

That changed in 1988, when the Standard Performance Evaluation Corporation (SPEC) was established to produce, maintain and endorse a standardized set of performance benchmarks for computers. Think of benchmarks like standardized tests for computers. Like the SATs or TOEFL, benchmarks are meant to provide a method of comparison between similar participants by asking them to perform the same tasks.

Since SPEC, dozens of benchmarking organizations have sprung up to provide a method of comparing the performance of various systems across different chip and program architecture.

Today, there is a new Wild West in machine learning. Currently, there are at least 40 different hardware companies poised to break ground in new AI processor architectures.

“Some of these companies will rise but many will fall,” said Vijay Janapa Reddi, Associate Professor of Electrical Engineering at the Harvard John A. Paulson School of Engineering and Applied Sciences (SEAS). “The challenge is how can we tell if one piece of hardware is better than another? That’s where benchmark standards become important.”

Janapa Reddi is one of the leaders of MLPerf, a machine learning benchmarking suite. ML Perf began as a collaboration between researchers at Baidu, Berkeley, Google, Harvard, and Stanford and has grown to include many companies, a host of universities, along with hundreds of individual participants worldwide. Other Harvard contributors include David Brooks, the Haley Family Professor of Computer Science at SEAS and Gu-Yeon Wei, the Robert and Suzanne Case Professor of Electrical Engineering and Computer Science at SEAS.

Thank you for reading this post, don't forget to subscribe to our AI NAVIGATOR!

The goal of ML Perf is to create a benchmark for measuring the performance of machine learning software frameworks, machine learning hardware accelerators, and machine learning cloud and edge computing platforms.

We spoke to Janapa Reddi about MLPerf and the future of benchmarking for machine learning.

SEAS: First, how does benchmarking for machine learning work?

Janapa Reddi: In its simplest form, a benchmark standard is a strict definition of a machine learning task, let’s say image classification. Using a model that implements that task, such as ResNet50, and a dataset, such as COCO or ImageNet, the model is evaluated with a target accuracy or quality metric that it must achieve when it is executed with the dataset.[…]

read more – copyright by www.seas.harvard.edu

Share this: