Artificial intelligence is by turns terrifying, overhyped, hard to understand and just plain awesome.

copyright by www.nytimes.com

For an example of the last, researchers at the University of California, San Francisco were able this year to hook people up to brain monitors and generate natural-sounding synthetic speech out of mere brain activity. The goal is to give people who have lost the ability to speak — because of a stroke, A.L.S., epilepsy or something else — the power to talk to others just by thinking.

That’s pretty awesome.

One area where A.I. can most immediately improve our lives may be mental health. Unlike with many illnesses, there’s no simple physical test you can give someone to tell whether he or she is suffering from depression.

Primary care physicians can be mediocre at recognizing whether a patient is depressed, or at predicting who is about to become depressed. Many people contemplate suicide, but it is very hard to tell who is really serious about it. Most people don’t seek treatment until their illness is well advanced.

Using A.I., researchers can make better predictions about who is going to get depressed next week, and who is going to try to kill themselves.

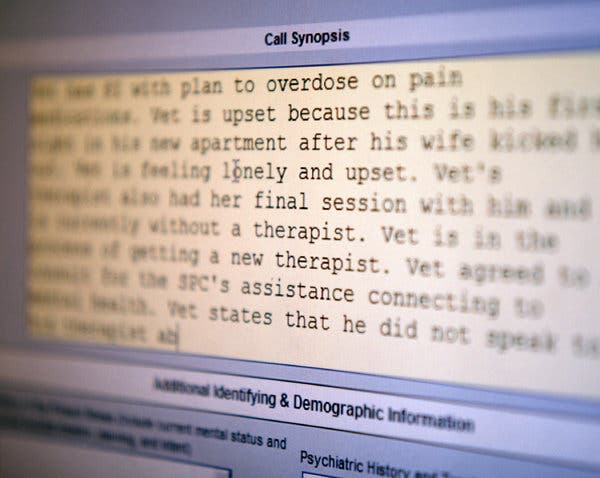

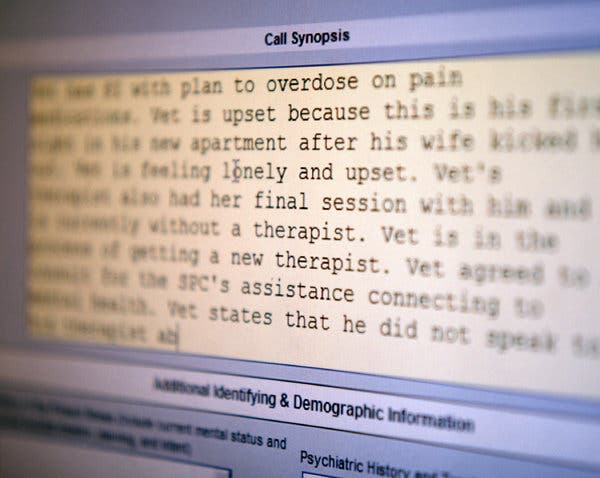

The Crisis Text Line is a suicide prevention hotline in which people communicate through texting instead of phone calls. Using A.I. technology, the organization has analyzed more than 100 million texts it has received. The idea is to help counselors understand who is really in immediate need of emergency care.

You’d think that the people most in danger of harming themselves would be the ones who use words like “suicide” or “die” most often. In fact, a person who uses words like “ibuprofen” or “Advil” is 14 times more likely to need emergency services than a person who uses “suicide.” A person who uses the crying face emoticon is 11 times more likely to need an active rescue than a person who uses “suicide.”
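To make a phrase like “14 times more likely” concrete, here is a minimal sketch of the arithmetic behind such a comparison. It is not the Crisis Text Line's actual method, which the article does not describe. The function names, the placeholder words `term_a` and `term_b`, and the toy counts are all hypothetical, chosen only so the division comes out cleanly.

```python
from typing import List, Set, Tuple

# One conversation: (words it contained, whether emergency services were needed).
# This record layout is an assumption made for illustration.
Conversation = Tuple[Set[str], bool]


def escalation_rate(conversations: List[Conversation], term: str) -> float:
    """Share of conversations containing `term` that needed emergency services."""
    flags = [needed for words, needed in conversations if term in words]
    if not flags:
        raise ValueError(f"no conversations contain {term!r}")
    return sum(flags) / len(flags)


def times_more_likely(
    conversations: List[Conversation], term: str, baseline: str
) -> float:
    """Ratio of the escalation rate for `term` to the rate for `baseline`."""
    base = escalation_rate(conversations, baseline)
    if base == 0:
        raise ValueError(f"baseline {baseline!r} never escalated; ratio undefined")
    return escalation_rate(conversations, term) / base


# Made-up toy data: 2 of 4 "term_a" conversations escalated (rate 0.5),
# and 1 of 8 "term_b" conversations escalated (rate 0.125).
toy: List[Conversation] = (
    [({"term_a"}, True)] * 2
    + [({"term_a"}, False)] * 2
    + [({"term_b"}, True)] * 1
    + [({"term_b"}, False)] * 7
)

print(times_more_likely(toy, "term_a", "term_b"))  # 4.0
```

A ratio like this says nothing on its own about how many conversations stand behind each rate, so a real analysis would also need to account for sample size and uncertainty.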

On its website, the Crisis Text Line posts the words that people who are seriously considering suicide frequently use in their texts. A lot of them seem to be all-or-nothing words: “never,” “everything,” “anymore,” “always.”

Many groups are using A.I. technology to diagnose and predict depression. For example, after listening to millions of conversations, machines can pick out depressed people based on their speaking patterns.

When people suffering from depression speak, the range and pitch of their voices tend to be lower. There are more pauses, starts and stops between words. People whose voices have a breathy quality are more likely to reattempt suicide. Machines can detect this stuff better than humans.[…]

Photo copyright: Ashley Gilbertson, VII Network for The New York Times
