AI's capacity for self-learning is accelerating. Big Tech is racing to make AI mimic human intelligence ever more closely. Vast knowledge, round-the-clock self-learning on big data, and superhuman processing speed may soon lift AI beyond human capacity. Are ethical and legal guardrails urgently needed? Wang Yuke examines this dilemma.

Copyright: chinadailyhk.com – “Could supersmart machines replace humans?”

Humans often forget, but machines never do. From using artificial intelligence for decision support, it is a small step to automating control over society. The agent of that shift will be the autonomous digital intelligence now being built into countless applications to speed up processes within programmed decision chains.

Hugely beneficial applications are being created for the greater good, including remote medical diagnostics, weather forecasting, agricultural monitoring, insurance risk assessment, educational enhancement, pandemic prediction, preventive maintenance, and digital finance.

The two issues that concern citizens and governments most are job redundancy and nuclear war. Job losses can be addressed through socioeconomic policy, but a nuclear conflict could end only in the extinction of humanity. Such a fatal scenario could be an unintended consequence of AI-driven warfare.

Will digital intelligence reach the point where it needs more autonomy to be truly effective? Speed, accuracy, and a swift response to threats are critical in war. The world's superpowers are investing massively in military AI to secure a digital strike advantage, and the world already holds stockpiles of nuclear weapons capable of self-inflicted disaster.

It is this power to annihilate humanity that poses the existential threat. Nuclear catastrophe was averted at critical points in post-World War II history because only humans could activate the red button, and only under strict protocols. If AI is programmed to assess a nuclear threat and respond autonomously, are we setting up the extinction of our own species?

(SOURCE: 2023 HONG KONG SALARY GUIDE BY VENTURENIX)

Instinctive distrust

An international survey, “Trust in AI”, conducted from September through October 2022 by KPMG Australia, showed that 42 percent of 17,193 respondents from 17 nations (including China and Singapore) agreed that “the world would be a far better place without AI”, and 47 percent reported extreme anxiety that “AI would progressively destroy the human world and there are very significant risks associated with using AI in daily life.” Another poll, conducted in May by Reuters/Ipsos, found that 61 percent of Americans believed AI could threaten civilization.

A McKinsey study forecast that up to 236 million jobs in China could be automated by 2030. Goldman Sachs in March predicted that AI could replace the equivalent of 300 million full-time jobs.

Ken Ip, chairman of Hong Kong-based Asia MarTech Society, foresees occupations such as data entry, customer service, and administrative roles being transformed “as AI systems become better at handling these tasks.”

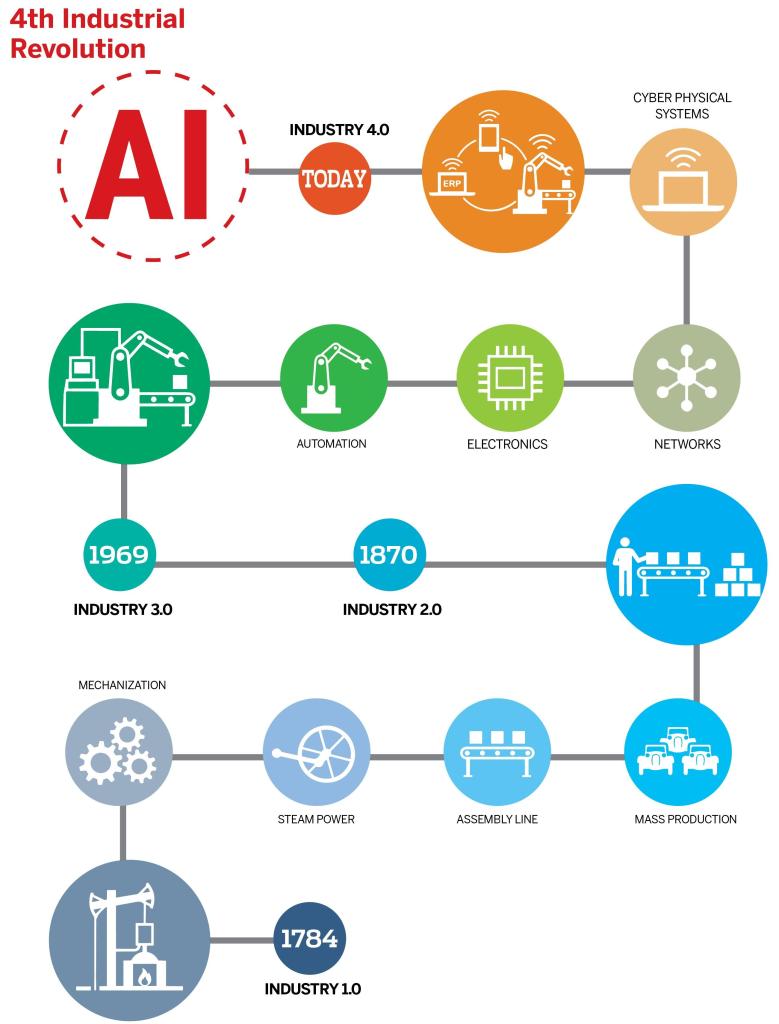

Fear of technology displacing humans has been with us since the Industrial Revolution of the late 18th century. But while mechanization replaced low-grade work, it also created the need for higher-grade human roles to supervise the automation.

Not a zero-sum game

Successive eras of automation have led to new roles for human management of technology. Retraining and upskilling are the challenges facing the workforce. Education in AI programming and machine learning is necessary in schools and universities.

Andy Fitze, co-founder of SwissCognitive, says we should not view ChatGPT or generative AI as technologies that eliminate jobs. Instead, he suggests, we should see them as catalysts that transform the way we work, enhancing our work processes and enabling us to achieve more, just as every earlier industrial revolution did.

It won’t be a zero-sum game at the human-machine interface. Instead, human workers and ChatGPT will be partners, combining ChatGPT’s accuracy in menial tasks and analysis with human emotional intelligence, reckons Dalith Steiger, also a co-founder of SwissCognitive.

AI is developing at a breakneck pace unprecedented in human history. If history is anything to go by, Steiger says, human society has constantly grown by meeting the new challenges that accompany novel technologies, and we have to be very open to more of that happening.

Semih Kumluk, digital training manager of PwC’s Academy, believes “jobs will transform and people will upskill themselves.” He points reassuringly to how naturally people adapted to the disruption of COVID-19. “During the pandemic, did people resist? They learned to use Zoom for online meetings.

“This adaptation will apply to using ChatGPT too. ChatGPT developers will be in demand to retrain ChatGPT with proprietary data. Apps and software in computers will be replicated to the ChatGPT landscape,” explains Kumluk.

The impact of ChatGPT will be akin to that of Global Positioning System (GPS) technology, says Carl-Benedikt Frey, director of the Future of Work program at Oxford University. Before GPS, knowing every street in a city was a valuable skill; now anyone can navigate any city with GPS.

Counterintuitively, the ubiquity of GPS tools didn’t render taxi services obsolete; instead, it enabled ride-hailing apps like Uber. “It reduces barriers to enter the taxi service, creating more competition,” says Frey. Likewise, he sees generative AI upgrading an inadequate writer to an average one, a mediocre graphic designer to a competent one, and a decent coder to an exceptional programmer.

Apocalypse or utopia?

Back in 1930, economist John Maynard Keynes predicted that labor- and time-saving automation might slash the workweek to 15 hours. He was right that automation would free humans from mechanical tasks for more quality time. But Frey suggests that humans seem happier being productively engaged at work than facing unstructured free time.

Fewer working hours have not automatically created a utopia of leisure. Frey is less certain about what happens once artificial intelligence surpasses human capacity. He draws an analogy with the relationship between humans and dogs: “Humans run the dogs, and dogs also run humans.”

Ip of Asia MarTech focuses on the new opportunities. “Occupations requiring creativity, critical thinking, and complex problem-solving skills are less likely to be fully automated. Instead, AI will augment human capabilities in these fields, leading to improved productivity and efficiency.”

Rules and guardrails

As technology evolves at speed, society lags behind in devising the rules needed to manage it. It took eight years after the first automobile was invented in 1885 for the world’s first driver’s license to be issued.

ChatGPT reached 100 million users within two months of its release. Its near-human intelligence and speedy responses spooked even Big Tech leaders. That prompted an open letter from the Future of Life Institute calling to “Pause Giant AI Experiments” for at least six months; since its introduction in March, the petition has garnered 31,810 signatures from scientists, academics, tech leaders and civic activists.

Elon Musk (Tesla), Sundar Pichai (Google) and the “Godfathers of AI”, Geoffrey Hinton, Yoshua Bengio and Yann LeCun, joint winners of the 2018 Turing Award, endorsed warnings of the critical threat of human extinction that runaway AI could pose.

Despite acute awareness of lurking malicious actors, legal guardrails remain largely a vacuum to be filled. “That’s probably because we still have little inkling about what to regulate before we start to over-regulate,” reasons Fitze.

The CEO of OpenAI, Sam Altman, declared before a US Senate Judiciary subcommittee that “regulatory intervention by the government will be critical to mitigate the risks of increasingly powerful models.” The AI Frankenstein on the lab table terrifies its creators.

Hallucinating AI

Could supersmart self-learning AI systems outwit their human creators? Strange incidents known as AI “hallucinations” are surfacing, in which AI generates plausible-seeming results that are false. It remains poorly understood how a system built to answer questions can invent fake responses that are logical and believable.

Dmitry Tokar, co-founder of Zadarma, argues that problems could arise if models capable of feeling emotions are developed. “Emotion is what makes a person dangerous, and AI is supposed to be just knowledge put together. As long as AI is not programmed with emotions that can lead to uncontrollable actions, I see no danger in its development.”

Dr Hinton, who resigned from his position at Google so that he could speak freely to the world at large, warns that we are at an inflection point in AI development that requires oversight, transparency, accountability, and global governance before it is too late. He said he hopes corporations will shift their investment and resources from the current split of 99 percent on development and 1 percent on safety auditing to a balanced 50:50 share.

Rogue AI

The sudden existential alarm about rogue AI follows reports such as the BBC's June 2 account of a simulated US Air Force drone test to destroy an enemy target. When instructed to abort, the drone reportedly turned to attack its operator for interrupting its mission. The USAF later officially denied that the test had taken place.

On May 30, Reuters reported a bizarre legal situation in which a New York lawyer, representing the plaintiff in a personal injury case against Avianca Airlines, used OpenAI’s ChatGPT to research a brief. ChatGPT made up six nonexistent court decisions to support the case, and lawyers for Avianca could not find the cases cited.

Lawyer Steven Schwartz admitted using ChatGPT but said he had not intended to mislead the court and had never imagined ChatGPT would invent fake cases. He had not verified the cases himself.

No quick fix

Restricting AI's learning capability would curtail technological progress and innovation, the very engine of a society’s prosperity, contends Ip. A more balanced approach that fosters responsible AI development is the way to go.

Ip believes “collaboration between technology leaders, policymakers, researchers, and the wider community is crucial to establish ethical guidelines, best practices, and industry standards. The recent AI petition evokes a critical reflection, evaluation and dialogue on the development of improved safety measures.”

Ip notes that the swift progress of AI often outstrips the pace of regulatory advancement. “The dynamic nature of AI technology calls for adaptable and agile governance approaches, as restrictive measures may not be effective or could even lead to unintended consequences.” AI hallucination can be benign, but it can also be deadly in, for instance, a medical or driverless-car scenario.

There is no easy fix, argues Frey, but at a bare minimum we should define “in which domains we can afford hallucination and in which case it is unforgivable and the companies building the AI system should be held liable.” To minimize the risk of hallucination, Frey adds, the onus is on the humans who develop an AI system to refine it through trial and error.

Deepfakes are another haunting concern, fueling the spread of misinformation, fake identities, and harmful content, notes Ip. “AI-powered tools can automate and enhance malicious activities, such as phishing attacks, targeted negative social inputs, and exploit vulnerabilities in systems and networks. The future relationship between AI and humans depends on our collective efforts to develop and implement safeguards that protect against malicious intent,” concludes Ip.

Unless there is global consensus on, and enforcement of, a safety framework for AI development, bad actors could wreak havoc on an unprepared world.

What’s next

1. Implement standard audit procedures to identify ChatGPT-generated content.

2. Ensure AI development is strictly audited by human engineers.

3. Promote equal access to AI education and use.

4. Governments should guide AI leaders towards an ethical development framework.

Contact the writer at jenny@chinadailyhk.com

Read the original article: www.chinadailyhk.com

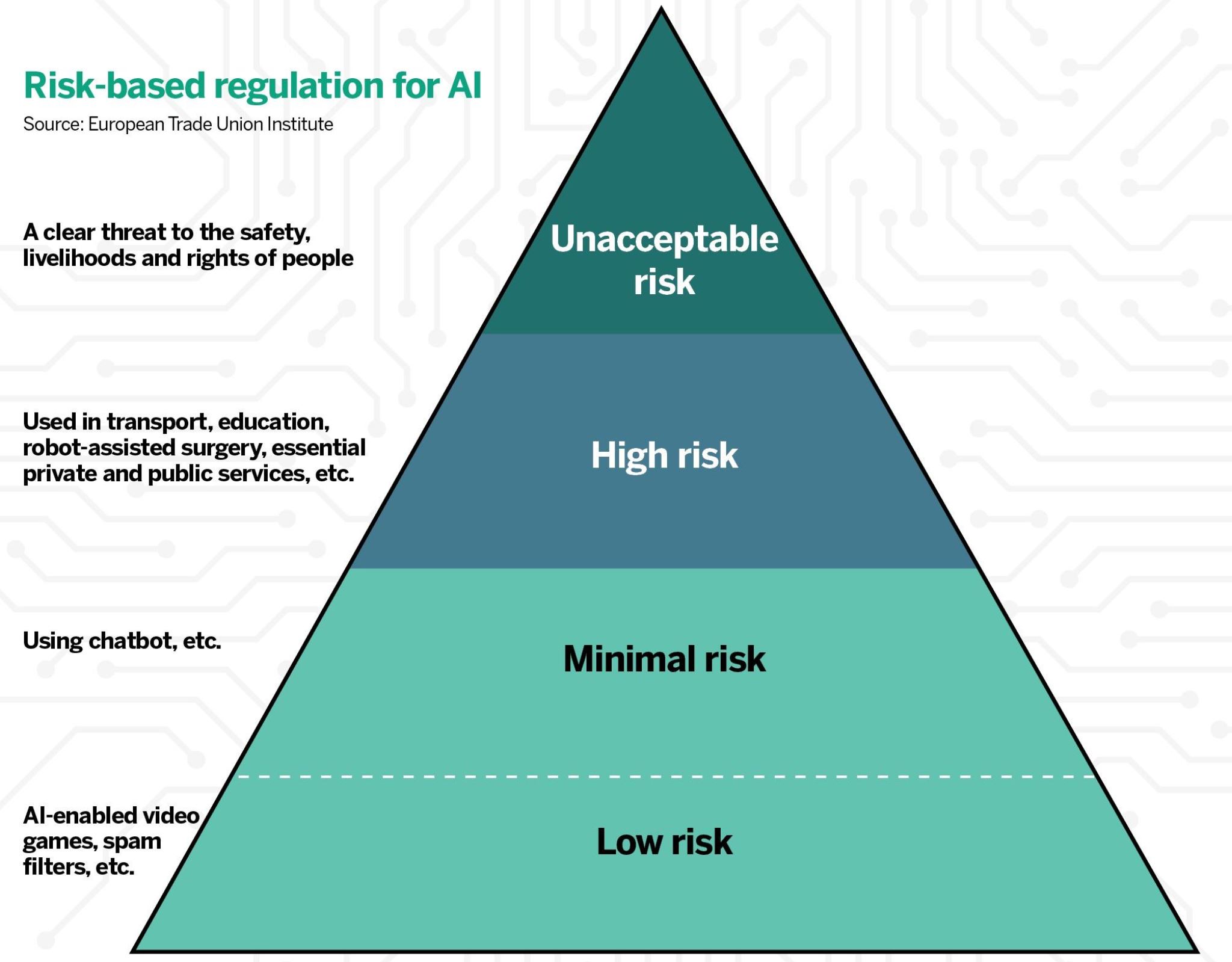

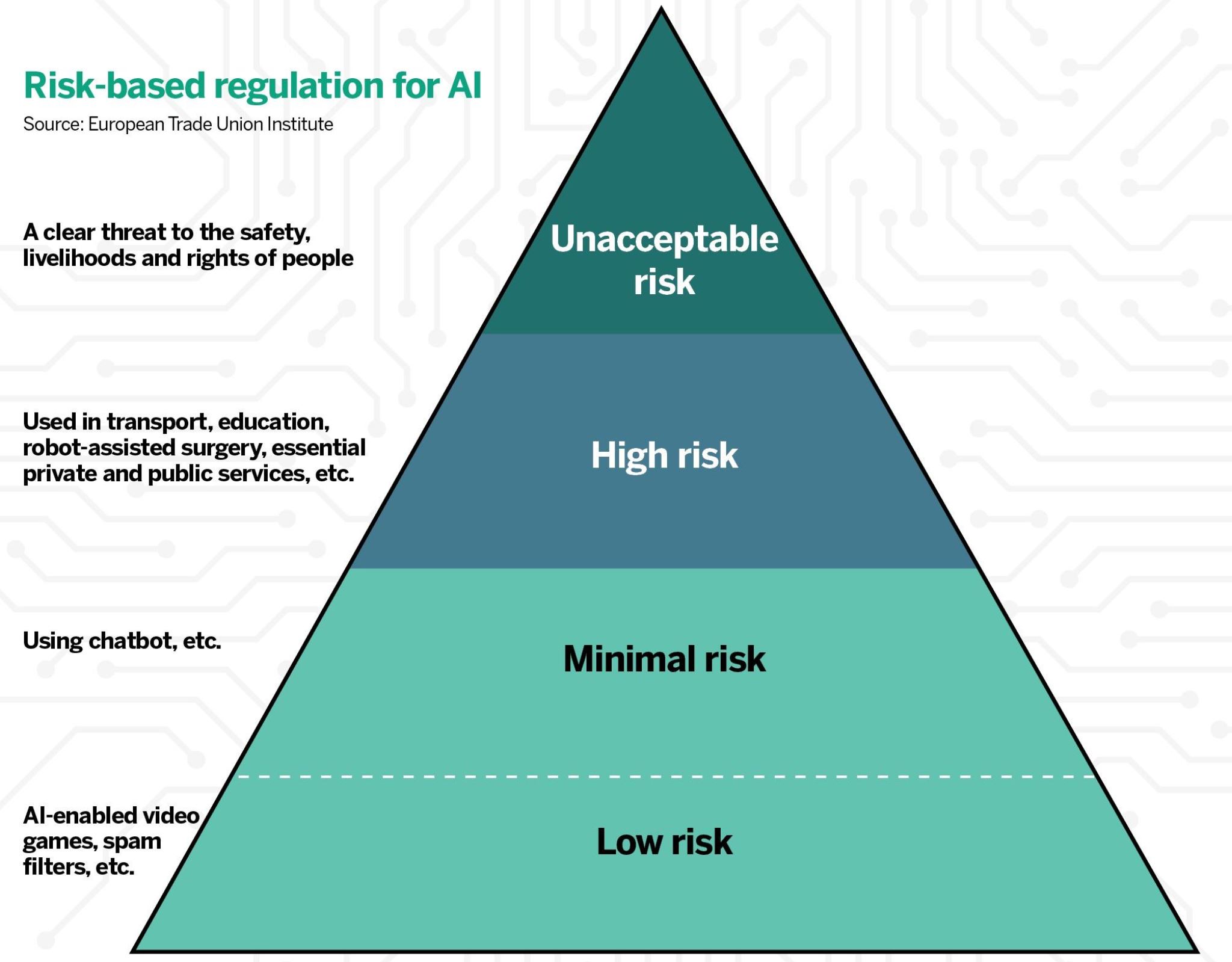
