- Artificial intelligence (AI) is powering impressive advances in many industries. Ensuring that AI systems are deployed responsibly is an urgent challenge.
- Certification programmes for AI systems should be a critical part of any regulatory approach.
- These programmes should be developed by subject matter experts, delivered by independent third parties and have auditable trails.

Copyright: weforum.org – “This underestimated tool could make AI innovation safer”

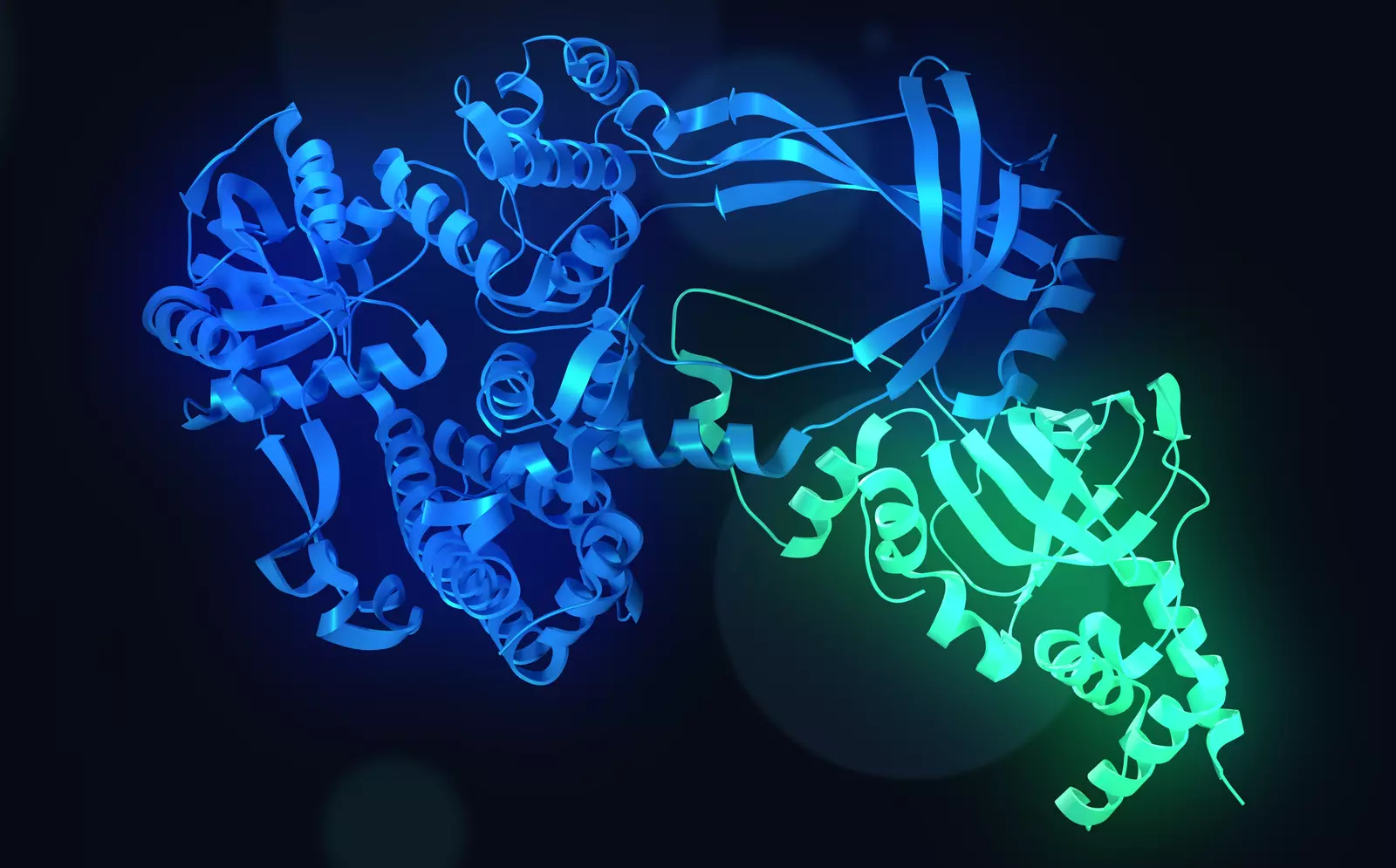

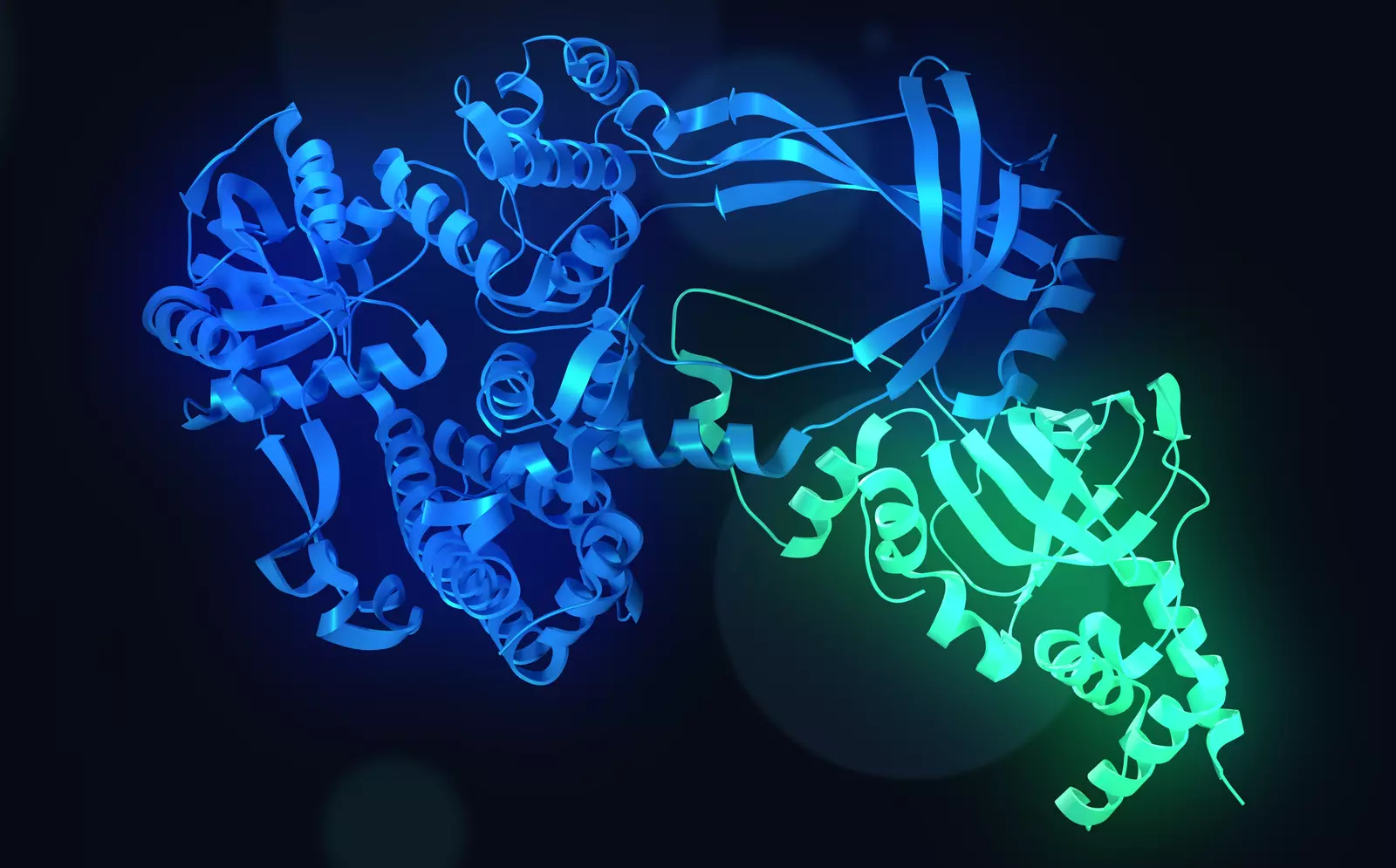

Ensuring that artificial intelligence (AI) systems are deployed responsibly is an urgent challenge. Private investment in AI doubled between 2020 and 2021, and the AI market is expected to expand at a compound annual growth rate of 38.1% through 2030. AI is rapidly powering impressive advances in many industries. For example, DeepMind's groundbreaking release of AI-predicted structures for nearly every known protein is likely to lead to major breakthroughs in drug discovery, pandemic response and agricultural innovation.

However, AI systems can be difficult to interpret and can lead to unpredictable outcomes. AI adoption also has the potential to exacerbate existing power disparities. As the pace of AI adoption increases, lawmakers are working hard to put appropriate safeguards in place. This requires a balance between promoting innovation and reducing harm, an understanding of AI’s effects in countless contexts and a long-term vision to address the “pacing problem” of AI as it advances faster than society’s ability to regulate its impacts.

Certification programmes for AI systems

Certification programmes for AI systems should be a critical part of any regulatory approach to AI as they can help achieve all of these goals. To be authoritative, they should be developed by subject matter experts, delivered by independent third parties and have auditable trails.

Responsible AI deployment means different things in different contexts. A chatbot for health insurance enrolment is very different to a self-driving car. By having an AI system certified, an organization can demonstrate to consumers, business partners and regulators that the system complies with applicable regulations, conforms to appropriate standards and, where relevant, meets responsible AI quality and testing requirements.

In other fields, certification programmes and other “soft law” mechanisms have successfully supplemented legislation and helped improve transnational standards.[…]

Read more: www.weforum.org
