A Cornell research group led by Prof. Peter McMahon, applied and engineering physics, has successfully trained various physical systems to perform machine learning computations in the same way as a computer.

Copyright: cornellsun.com – “Cornell Researchers Train Physical Systems, Revolutionize Machine Learning”

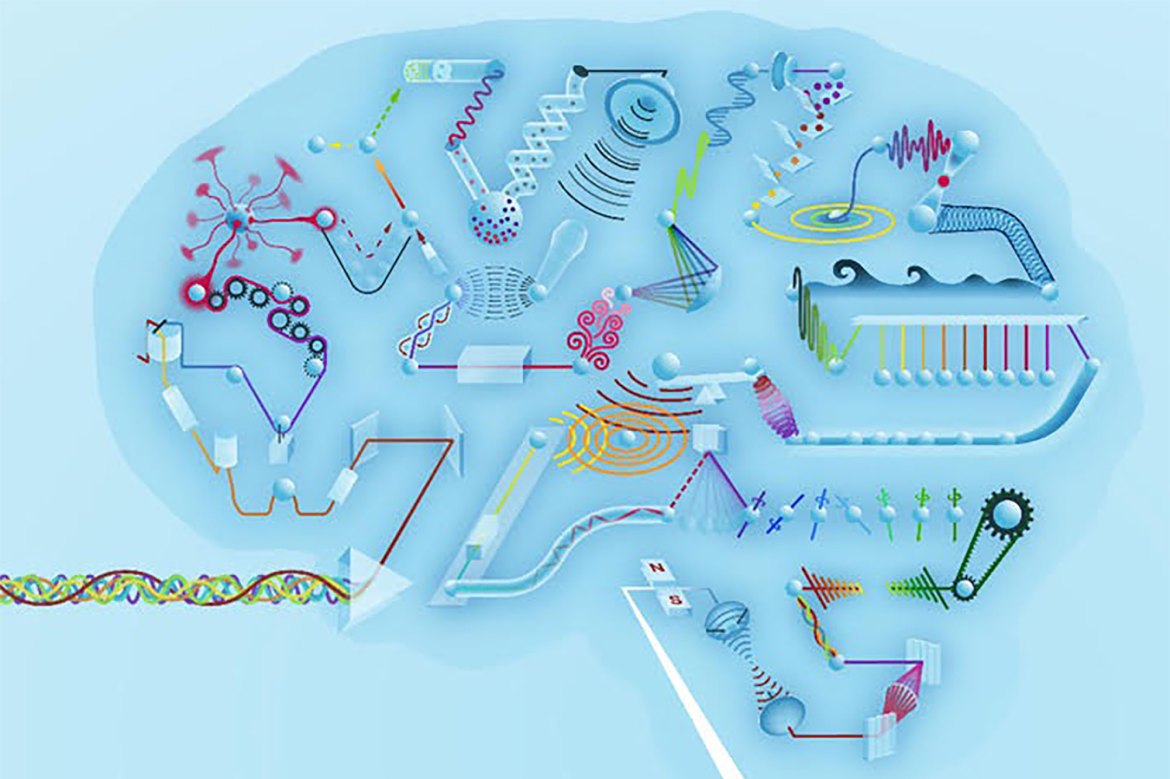

The researchers achieved this by turning physical systems, such as an electrical circuit or a Bluetooth speaker, into physical neural networks. A neural network is a series of algorithms loosely modeled on the human brain that allows computers to recognize patterns.

Machine learning is at the forefront of scientific endeavors today. It is used for a host of real-life applications, from Siri to search optimization to Google Translate. However, chip energy consumption is a major issue in this field, since running neural networks, which form the basis of machine learning, uses an immense amount of energy. This inefficiency severely limits the expansion of machine learning.

The research group has taken the first step towards solving this problem by focusing on the convergence of the physical sciences and computation.

The physical systems that McMahon and his team have trained (a simple electric circuit, a speaker and an optical network) have identified handwritten numbers and spoken vowel sounds with a high degree of accuracy and greater efficiency than conventional computers.

According to the recent paper in Nature, “Deep Physical Neural Networks Trained with Backpropagation,” conventional neural networks are usually built by applying layers of mathematical functions. This relates to a subset of machine learning known as deep learning, in which the algorithms are modeled on the human brain and the networks are expected to learn in a similar way to the brain.
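To make "layers of mathematical functions" concrete, here is a minimal sketch of a conventional two-layer network in plain Python. It is an illustration only, not code from the paper: the helper names `dense` and `compose`, the layer sizes, and the hand-picked weights are all assumptions, and no training is performed.

```python
import math


def dense(weights, biases):
    """Build one layer: a weighted sum per output unit followed by tanh."""
    def layer(x):
        return [
            math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
            for row, b in zip(weights, biases)
        ]
    return layer


def compose(*layers):
    """Chain layers so each layer's output feeds the next layer."""
    def network(x):
        for layer in layers:
            x = layer(x)
        return x
    return network


# Hypothetical, untrained weights chosen only for illustration.
hidden = dense([[0.5, -0.2], [0.1, 0.8], [-0.3, 0.4]], [0.0, 0.1, -0.1])
output = dense([[1.0, -1.0, 0.5]], [0.0])
network = compose(hidden, output)

# Two input values pass through three hidden units to one output value.
y = network([1.0, 2.0])
print(y)
```

In a physical neural network as the article describes it, each of these mathematical layers would be replaced by the response of a physical system, such as a circuit or a speaker, to its input.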

“Deep learning is usually driven by mathematical operations. We decided to make a physical system do what we wanted it to do – more directly,” said co-author and postdoctoral researcher Tatsuhiro Onodera. […]

Read more: www.cornellsun.com

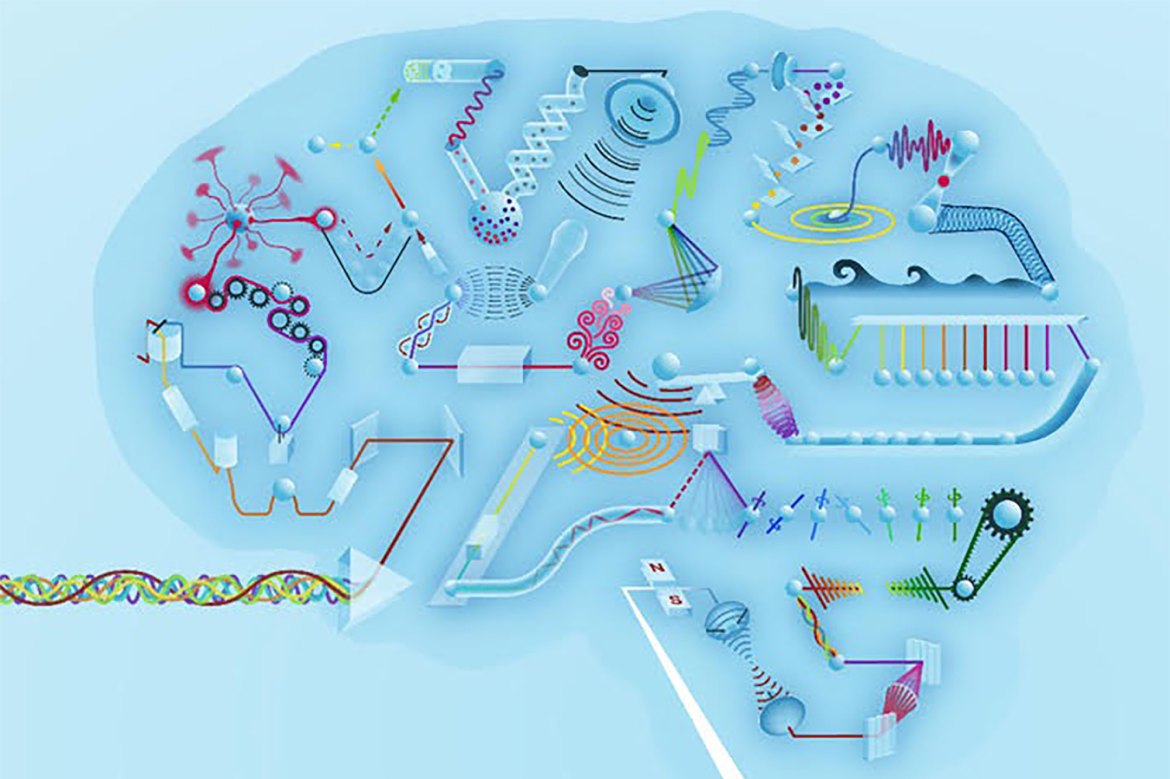
