read more – copyright by www.artificialintelligence-news.com

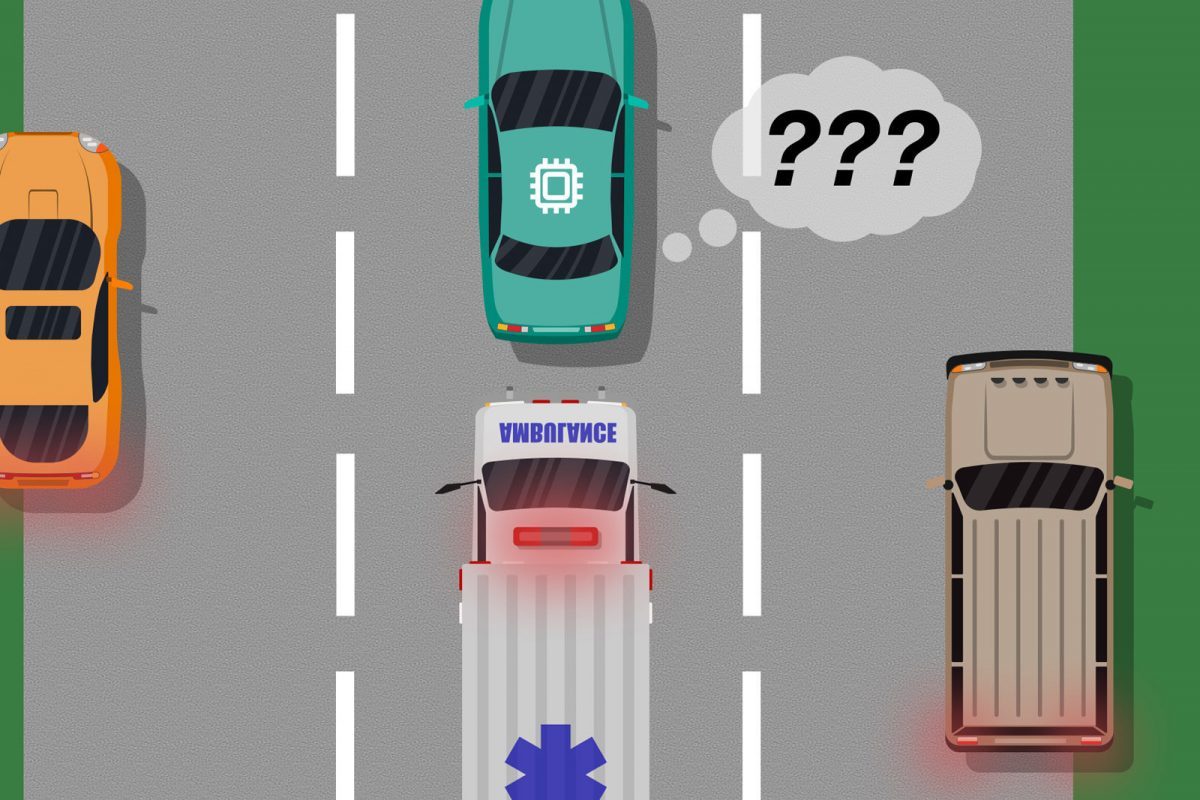

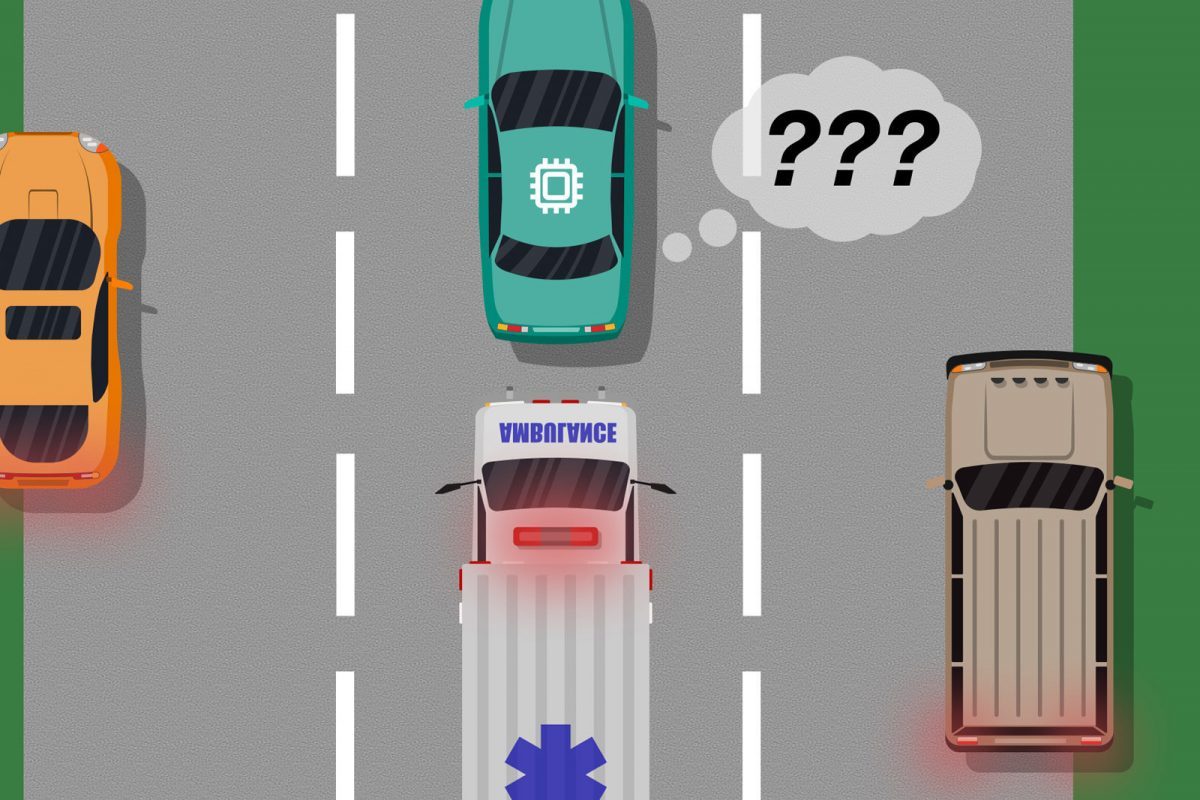

Microsoft and MIT have partnered on a project to fix so-called virtual ‘blind spots’ which lead driverless cars to make errors.

Roads, especially when shared with human drivers, are unpredictable places. Training a self-driving car for every possible situation is a monumental task.

The AI developed by Microsoft and MIT compares the action a human takes in a given scenario with the action the driverless car's own AI would take. Where the human's decision is better, the vehicle's behaviour is updated for similar situations in the future.
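The comparison described above can be illustrated with a toy sketch. It assumes a small, discrete set of named driving situations and actions, and it assumes the human's action is always the better one wherever the two disagree. The names `compare_with_human`, `update_policy` and the situation/action strings are hypothetical; the article does not describe the Microsoft/MIT system at code level, so this is not their implementation.

```python
from typing import Dict, List, Tuple

# Hypothetical types: a "policy" maps a named situation to the action the
# car's AI would take; a demonstration pairs a situation with a human's action.
Policy = Dict[str, str]
Demo = Tuple[str, str]


def compare_with_human(policy: Policy, demos: List[Demo]) -> Dict[str, str]:
    """Label each demonstrated situation 'acceptable' if the AI would have
    matched the human driver, otherwise 'unacceptable'."""
    labels: Dict[str, str] = {}
    for situation, human_action in demos:
        agent_action = policy.get(situation)
        labels[situation] = (
            "acceptable" if agent_action == human_action else "unacceptable"
        )
    return labels


def update_policy(policy: Policy, demos: List[Demo]) -> Policy:
    """Return a copy of the policy that adopts the human's action in every
    demonstrated situation (the human is assumed to be the better driver).
    The original policy is left unchanged."""
    updated = dict(policy)
    for situation, human_action in demos:
        updated[situation] = human_action
    return updated


if __name__ == "__main__":
    policy = {
        "ambulance_city_road": "pull_over_right",
        "ambulance_country_road": "keep_lane",
    }
    demos = [
        ("ambulance_city_road", "pull_over_right"),
        ("ambulance_country_road", "slow_and_edge_onto_grass"),
    ]
    print(compare_with_human(policy, demos))
    # → {'ambulance_city_road': 'acceptable', 'ambulance_country_road': 'unacceptable'}
    print(update_policy(policy, demos)["ambulance_country_road"])
    # → slow_and_edge_onto_grass
```

A real system would need to generalise from these examples to *similar* situations rather than only the exact ones demonstrated; the exact-match lookup here is a deliberate simplification.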

Ramya Ramakrishnan, an author of the report, says: “The model helps autonomous systems better know what they don’t know.

“Many times, when these systems are deployed, their trained simulations don’t match the real-world setting [and] they could make mistakes, such as getting into accidents.

“The idea is to use humans to bridge that gap between simulation and the real world, in a safe way, so we can reduce some of those errors.”

For example, if an emergency vehicle is approaching, a human driver should know to let it pass if it is safe to do so. These situations can get complex depending on the surroundings.

On a country road, allowing the vehicle to pass could mean edging onto the grass. The last thing you, or the emergency services, want a driverless car to do is to handle all country roads the same and swerve off a cliff edge.

Humans can either ‘demonstrate’ the correct approach in the real world or ‘correct’ the car by sitting at the wheel and taking over if its actions are incorrect. A list of situations is compiled, along with labels indicating whether the car’s actions were deemed acceptable or unacceptable. The researchers have ensured that a driverless car’s AI does not regard an action as 100 percent safe even if the outcome has been safe so far. Using […]
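One simple way to keep a safety estimate from ever reaching certainty is add-one (Laplace) smoothing of the acceptable/unacceptable counts, which is the mean of a Beta(1, 1) posterior. The sketch below swaps in that textbook technique purely for illustration; it is not the researchers’ method, and the function names and the 0.1 risk threshold are hypothetical.

```python
from collections import Counter
from typing import Dict, Iterable, List, Tuple

# Hypothetical label format: (situation, True if the car's action was acceptable).
Label = Tuple[str, bool]


def unsafe_probability(labels: Iterable[Label]) -> Dict[str, float]:
    """Estimate P(unacceptable) per situation as (unacceptable + 1) / (total + 2).
    Even with zero observed failures the estimate stays above zero, so no
    situation is ever treated as 100 percent safe."""
    total = Counter()
    bad = Counter()
    for situation, acceptable in labels:
        total[situation] += 1
        if not acceptable:
            bad[situation] += 1
    return {s: (bad[s] + 1) / (total[s] + 2) for s in total}


def blind_spots(labels: Iterable[Label], threshold: float = 0.1) -> List[str]:
    """Return situations whose estimated risk exceeds the (hypothetical) threshold."""
    return sorted(s for s, p in unsafe_probability(labels).items() if p > threshold)


if __name__ == "__main__":
    labels = [("highway_merge", True)] * 18 + [
        ("ambulance_country_road", False),
        ("ambulance_country_road", True),
    ]
    print(unsafe_probability(labels))
    # → {'highway_merge': 0.05, 'ambulance_country_road': 0.5}
    print(blind_spots(labels))
    # → ['ambulance_country_road']
```

Eighteen acceptable outcomes still leave a 5 percent estimated risk for `highway_merge`, which mirrors the idea that past success does not prove an action is safe.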
