A new and unorthodox approach to dealing with discriminatory bias in Artificial Intelligence is needed. As this article explores, the current literature is split in two, with studies originating either in philosophy and sociology or in data science and programming.

SwissCognitive Guest Blogger: Lorenzo Belenguer

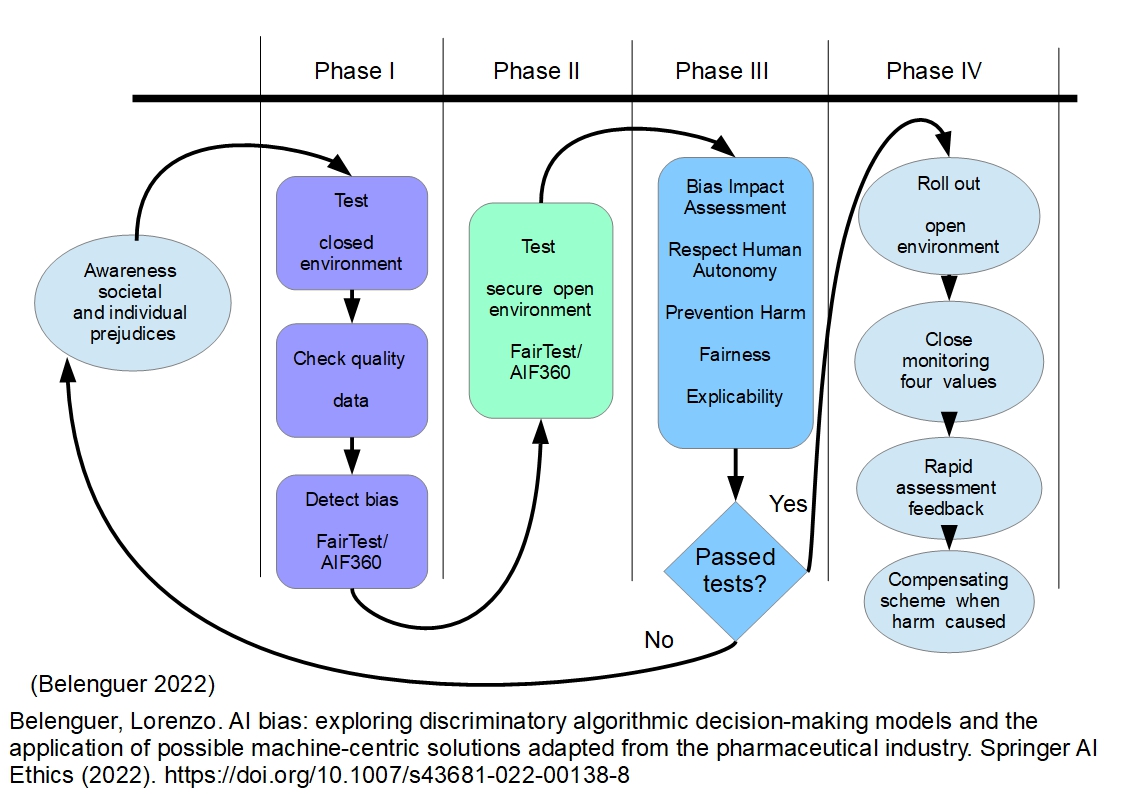

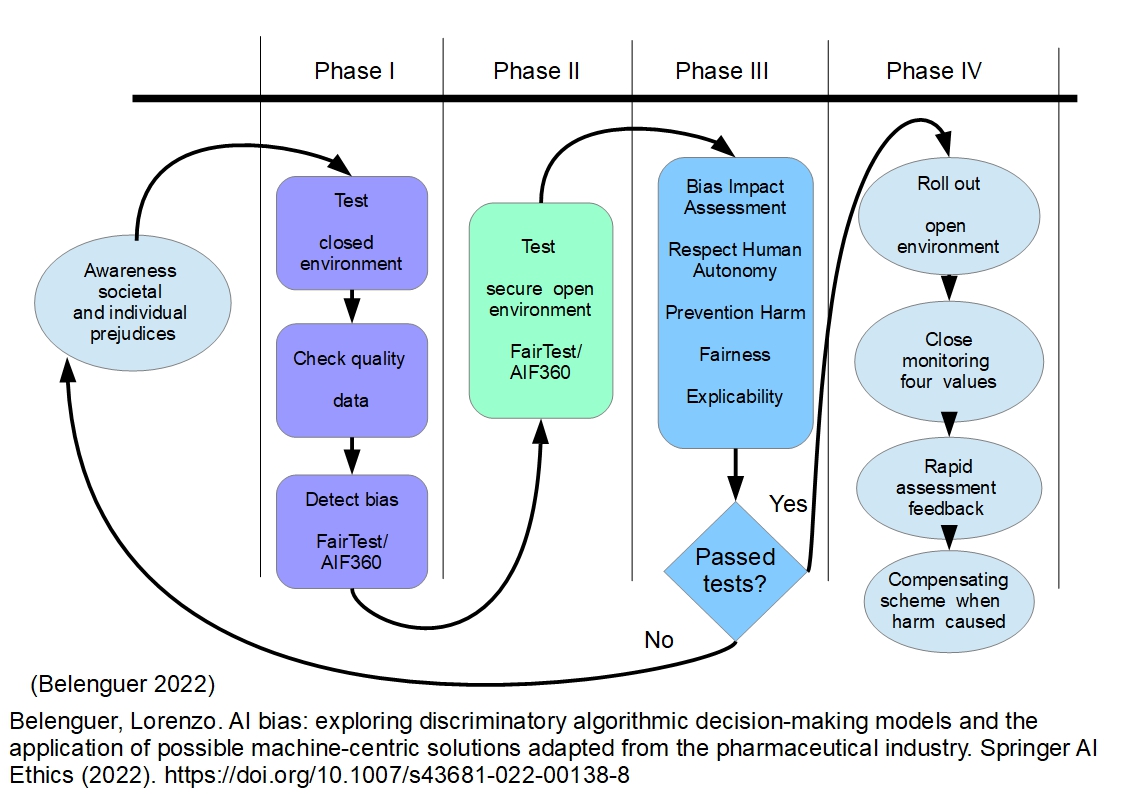

What is needed instead is an integration of both academic approaches, one that is machine-centric rather than human-centric and applied with a deep understanding of societal and individual prejudices. This article develops that approach into a framework of action: a bias impact assessment to raise awareness of bias and why it occurs, a clear set of methodologies set out in a table that compares them with the four stages of pharmaceutical trials, and a summary flowchart. Finally, the study concludes that a transnational independent body is needed, with enough power to guarantee the implementation of those solutions.

Bias leading to discriminatory outcomes is gaining attention in the AI industry. The let’s-drop-a-model-into-the-system-and-see-how-it-goes approach is no longer viable. AI has become so ubiquitous in our daily lives, and can and does have such dramatic effects on society, that an effective framework of actions to mitigate bias should be compulsory. The most disadvantaged groups tend to be the most affected. If we aim for a more equal and fairer society, we need to stop looking the other way and standardise a set of methodologies. As I explore in more detail in my paper published in the Springer Nature journal AI and Ethics, industries with a long history of applied ethics, such as the pharmaceutical industry, can greatly assist.

The reader will gain a better understanding by starting from the flowchart included in this article. The model is inspired by the four stages that a pharmaceutical company conducts before launching a new medicine, and by the regulatory follow-up. The whole process is monitored by an independent body, like the FDA in the US, before the product is allowed to reach the market. Harm is minimised and, as soon as it is detected, removed. The model includes a compensatory scheme if negligence is proven, as we are witnessing with the overprescription of opioid drugs in the US.

Before we start, an awareness of individual and societal prejudices is paramount. I would add a good understanding of the concept of protected groups. Machines can be biased because we are, and because we live in a society that is biased. This is one of the reasons why anthropology is gaining prominence in AI ethics, especially since historical data is one of the main sources used to feed ML models.

The first phase consists of testing the system in a closed environment while checking the quality of the data used to train the models and how it has been collected. It also includes the first round of bias detection with specialised tools such as FairTest or AIF360.
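To make the idea of a first bias check more concrete, here is a minimal sketch in plain Python of two group-fairness measures that toolkits of this kind typically report: the disparate impact ratio and the statistical parity difference. It does not use the FairTest or AIF360 APIs. The function names, the toy hiring data and the group labels "A" and "B" are all assumptions made up for illustration.

```python
from collections import defaultdict


def selection_rates(records, group_key, outcome_key):
    """Return the share of favourable outcomes (outcome == 1) per group."""
    totals = defaultdict(int)
    favourable = defaultdict(int)
    for row in records:
        totals[row[group_key]] += 1
        favourable[row[group_key]] += row[outcome_key]
    return {group: favourable[group] / totals[group] for group in totals}


def disparate_impact(rates, unprivileged, privileged):
    """Ratio of selection rates; 1.0 means both groups are treated alike."""
    return rates[unprivileged] / rates[privileged]


def statistical_parity_difference(rates, unprivileged, privileged):
    """Difference of selection rates; 0.0 means both groups are treated alike."""
    return rates[unprivileged] - rates[privileged]


# Hypothetical toy data: 10 applicants per group, 1 = hired, 0 = rejected.
records = (
    [{"group": "A", "hired": 1}] * 6 + [{"group": "A", "hired": 0}] * 4
    + [{"group": "B", "hired": 1}] * 3 + [{"group": "B", "hired": 0}] * 7
)

rates = selection_rates(records, "group", "hired")
di = disparate_impact(rates, unprivileged="B", privileged="A")
spd = statistical_parity_difference(rates, unprivileged="B", privileged="A")

print(f"selection rates: {rates}")
print(f"disparate impact: {di:.2f}")
print(f"statistical parity difference: {spd:.2f}")
```

A disparate impact ratio below 0.8 is a commonly cited rule of thumb (the "four-fifths rule") for flagging a result for closer inspection. It is a starting point for the human analysis described in this article, not a verdict.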

In the second phase, the system is tested in a secure open environment, and a second round of bias detection is conducted, again with specialised tools such as FairTest or AIF360. By the second stage, we are better positioned to unearth its possible flaws and discriminatory outcomes.

After the first and second phases, we are ready to conduct a bias impact assessment in the third phase. Impact assessments are as old as humankind: a hunter would assess an environment to spot any risks and benefits. They can be very helpful in clearly identifying the main stakeholders, their interests, their power to block or allow necessary changes, and the short- and long-term impacts. If we want to mitigate bias in an algorithmic model, the first step is to be aware of the biases and why they occur. The bias impact assessment does that, and that is why it is relevant. It is helpful to provide a list of essential values to facilitate a robust analysis to detect bias, as provided by the EU white paper on Trustworthy AI, 2019 p. 14. They are respect for human autonomy, prevention of harm, fairness and explicability. Those values are further explained in my paper (link provided in the first paragraph).

Once the tests are passed, the AI system can be deployed and made fully accessible to its users, whether a specific group of professionals, such as an HR department, or the general public, for example in credit rating when applying for a mortgage. Finally, the fourth phase is implemented through close monitoring of the four values, rapid assessment of feedback from users, and a compensation scheme when harm can be proven. At this stage, an independent body is needed, on a transnational level if possible, with enough power to guarantee the implementation of those safeguarding methodologies and to enforce them when they are not implemented. The time for voluntary cooperation is over, and the time for action is now.
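As a rough illustration of what close monitoring in the fourth phase could look like in practice, the sketch below keeps a rolling window of recent decisions and flags the system for review when the ratio between the lowest and highest group selection rates drops below a threshold. The `BiasMonitor` class, its window size and its 0.8 threshold are hypothetical choices for this example, not part of the framework or of any existing library.

```python
from collections import deque


class BiasMonitor:
    """Hypothetical rolling monitor of favourable-outcome rates per group."""

    def __init__(self, window=100, threshold=0.8):
        # Only the most recent `window` decisions are kept.
        self.decisions = deque(maxlen=window)
        self.threshold = threshold

    def record(self, group, favourable):
        """Store one deployed decision for the given group."""
        self.decisions.append((group, bool(favourable)))

    def rates(self):
        """Share of favourable decisions per group within the window."""
        totals, wins = {}, {}
        for group, favourable in self.decisions:
            totals[group] = totals.get(group, 0) + 1
            wins[group] = wins.get(group, 0) + int(favourable)
        return {group: wins[group] / totals[group] for group in totals}

    def needs_review(self):
        """True when the lowest rate is below threshold times the highest."""
        rates = self.rates()
        if len(rates) < 2:
            return False  # nothing to compare yet
        highest = max(rates.values())
        if highest == 0:
            return False  # no favourable decisions for any group
        return min(rates.values()) / highest < self.threshold


# Hypothetical stream of decisions: group "A" is approved far more often.
monitor = BiasMonitor(window=10)
stream = [("A", 1)] * 4 + [("A", 0)] + [("B", 1)] + [("B", 0)] * 4
for group, favourable in stream:
    monitor.record(group, favourable)

flagged = monitor.needs_review()
print(monitor.rates(), "needs review:", flagged)
```

A flag raised this way would only trigger the human steps described above: reviewing user feedback, revisiting the bias impact assessment and, where harm is proven, compensation.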

About the Author:

Lorenzo Belenguer is a visual artist and an AI Ethics researcher. Belenguer holds an MA in Artificial Intelligence & Philosophy, and a BA (Hons) in Economics and Business Science.
