Copyright by www.datamation.com

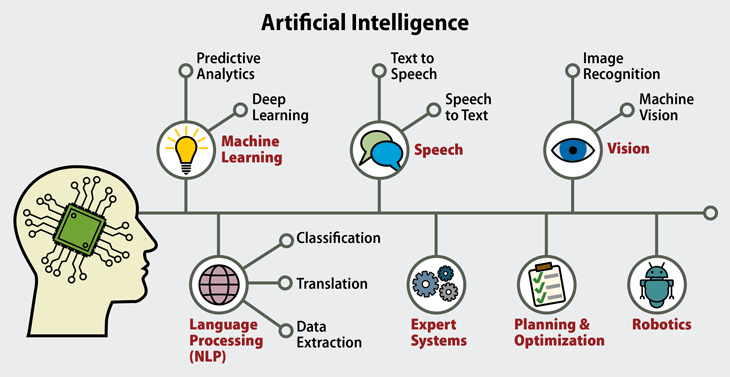

The term artificial intelligence (AI) refers to computing systems that perform tasks normally considered within the realm of human decision making. These software-driven systems and intelligent agents incorporate advanced data analytics and Big Data applications. AI systems leverage this knowledge repository to make decisions and take actions that approximate cognitive functions, including learning and problem solving.

AI, which was introduced as an area of science in the mid-1950s, has evolved rapidly in recent years. It has become a valuable and essential tool for orchestrating digital technologies and managing business operations. Particularly useful are AI advances such as machine learning and deep learning.

It’s important to recognize that AI is a constantly moving target. Things that were once considered within the domain of artificial intelligence – optical character recognition and computer chess, for example – are now considered routine computing. Today, robotics, image recognition, natural language processing, real-time analytics tools and various connected systems within the Internet of Things (IoT) all tap AI in order to deliver more advanced features and capabilities.

The many cloud companies that offer cloud-based AI services are helping to drive AI development. Statista projects that the AI market will grow at an annual rate exceeding 127% through 2025.

By then, the market for AI systems will top $4.8 billion. Consulting firm Accenture reports that AI could double annual economic growth rates by 2035 by “changing the nature of work and spawning a new relationship between man and machine.” Not surprisingly, observers have both heralded and derided the technology as it filters into business and everyday life.

History of Artificial Intelligence: Duplicating the Human Mind

The dream of developing machines that can mimic human cognition dates back centuries. In the 1890s, science fiction writers such as H.G. Wells began exploring the concept of robots and other machines thinking and acting like humans.

It wasn’t until the early 1940s, however, that the idea of artificial intelligence began to take shape in a real way. After Alan Turing introduced the theory of computation (essentially, how machines could use algorithms to produce “thinking”), other researchers began exploring ways to create AI frameworks.

In 1956, researchers gathering at Dartmouth College launched the practical application of AI. This included teaching computers to play checkers at a level that could beat most humans. In the decades that followed, enthusiasm about AI waxed and waned.

In 1997, Deep Blue, a chess-playing computer developed by IBM, beat reigning world chess champion Garry Kasparov. In 2011, IBM introduced Watson, which used far more sophisticated techniques, including natural language processing and machine learning, to defeat two top Jeopardy! champions.

Although AI continued to advance over the next few years, observers often cite 2015 as the landmark year for AI. Google Cloud, Amazon Web Services, Microsoft Azure and others stepped up their research and improved their natural language processing, computer vision and analytics tools.

Today, AI is embedded in a growing number of applications and tools. These range from enterprise analytics programs and digital assistants like Siri and Alexa to autonomous vehicles and facial recognition.

Different Forms of Artificial Intelligence

Artificial intelligence is an umbrella term that refers to any and all machine intelligence. However, there are several distinct areas of AI research and use, though they sometimes overlap. These include:

- General AI. These systems typically learn from the world around them and apply data in a cross-domain way. For example, DeepMind, now owned by Google, used a neural network to learn to play video games in a way similar to how humans play them.

- Natural Language Processing (NLP). This technology allows machines to read, understand, and interpret human language. NLP uses statistical methods and semantic programming to understand grammar and syntax and, in some cases, the emotions of the writer or of those interacting with a system such as a chatbot.

- Machine perception. Over the last few years, enormous advances in sensors (cameras, microphones, accelerometers, GPS, radar and more) have powered machine perception, which encompasses speech recognition and the computer vision used for facial and object recognition.

- Robotics. Robotic devices are widely used in factories, hospitals and other settings. In recent years, drones have also taken flight. These systems, which rely on sophisticated mapping and complex programming, also use machine perception to navigate through tasks.

- Social intelligence. Autonomous vehicles, robots, and digital assistants such as Siri and Alexa require coordination and orchestration. As a result, these systems must have an understanding of human behavior along with a recognition of social norms. […]

Read more www.datamation.com

The dream of developing machines that can mimic human cognition dates back centuries. In the 1890s, science fiction writers such as H.G. Wells began exploring the concept of robots and other machines thinking and acting like humans

Copyright by www.datamation.com

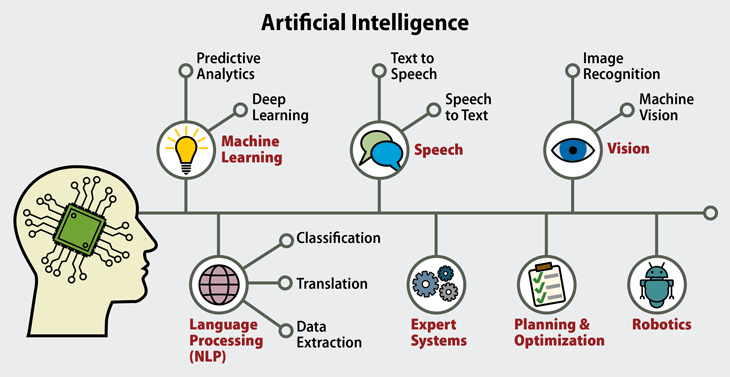

AI, which was introduced as an area of science in the mid 1950s, has evolved rapidly in recent years. It has become a valuable and essential tool for orchestrating digital technologies and managing business operations. Particularly useful are AI advances such machine learning and deep learning.

It’s important to recognize that AI is a constantly moving target. Things that were once considered within the domain of artificial intelligence – optical character recognition and computer chess, for example – are now considered routine computing. Today, robotics, image recognition, natural language processing, real-time analytics tools and various connected systems within the Internet of Things (IoT) all tap AI in order to deliver more advanced features and capabilities.

Helping develop AI are the many cloud companies that offer cloud-based AI services. Statistica projects that AI will grow at an annual rate exceeding 127% through 2025.

By then, the market for AI systems will top $4.8 billion dollars. Consulting firm Accenture reports that AI could double annual economic growth rates by 2035 by “changing the nature of work and spawning a new relationship between man and machine.” Not surprisingly, observers have both heralded and derided the technology as it filters into business and everyday life.

History of Artificial Intelligence: Duplicating the Human Mind

The dream of developing machines that can mimic human cognition dates back centuries. In the 1890s, science fiction writers such as H.G. Wells began exploring the concept of robots and other machines thinking and acting like humans.

It wasn’t until the early 1940s, however, that the idea of artificial intelligence began to take shape in a real way. After Alan Turing introduced the theory of computation – essentially how algorithms could be used by machines to produce machine “thinking” – other researchers began exploring ways to create AI frameworks.

Thank you for reading this post, don't forget to subscribe to our AI NAVIGATOR!

In 1956, researchers gathering at Dartmouth College launched the practical application of AI. This included teaching computers to play checkers at a level that could beat most humans. In the decades that followed, enthusiasm about AI waxed and waned.

In 1997, a chess-playing computer developed by IBM, Deep Blue, beat reigning world chess champion, Garry Kasparov. In 2011, IBM introduced Watson, which used far more sophisticated techniques, including deep learning and machine learning, to defeat two top Jeopardy! champions.

Although AI continued to advance over the next few years, observers often cite 2015 as the landmark year for AI. Google Cloud, Amazon Web Services, and Microsoft Azure and others began to step up research and improve natural language processing capabilities, computer vision and analytics tools.

Today, AI is embedded in a growing number of applications and tools. These range from enterprise analytics programs and digital assistants like Siri and Alexa to autonomous vehicles and facial recognition.

Different Forms of Artificial Intelligence

Artificial intelligence is an umbrella term that refers to any and all machine intelligence. However, there are several distinct and separate areas of AI research and use – though they sometimes overlap. These include:

Read more www.datamation.com

Share this: