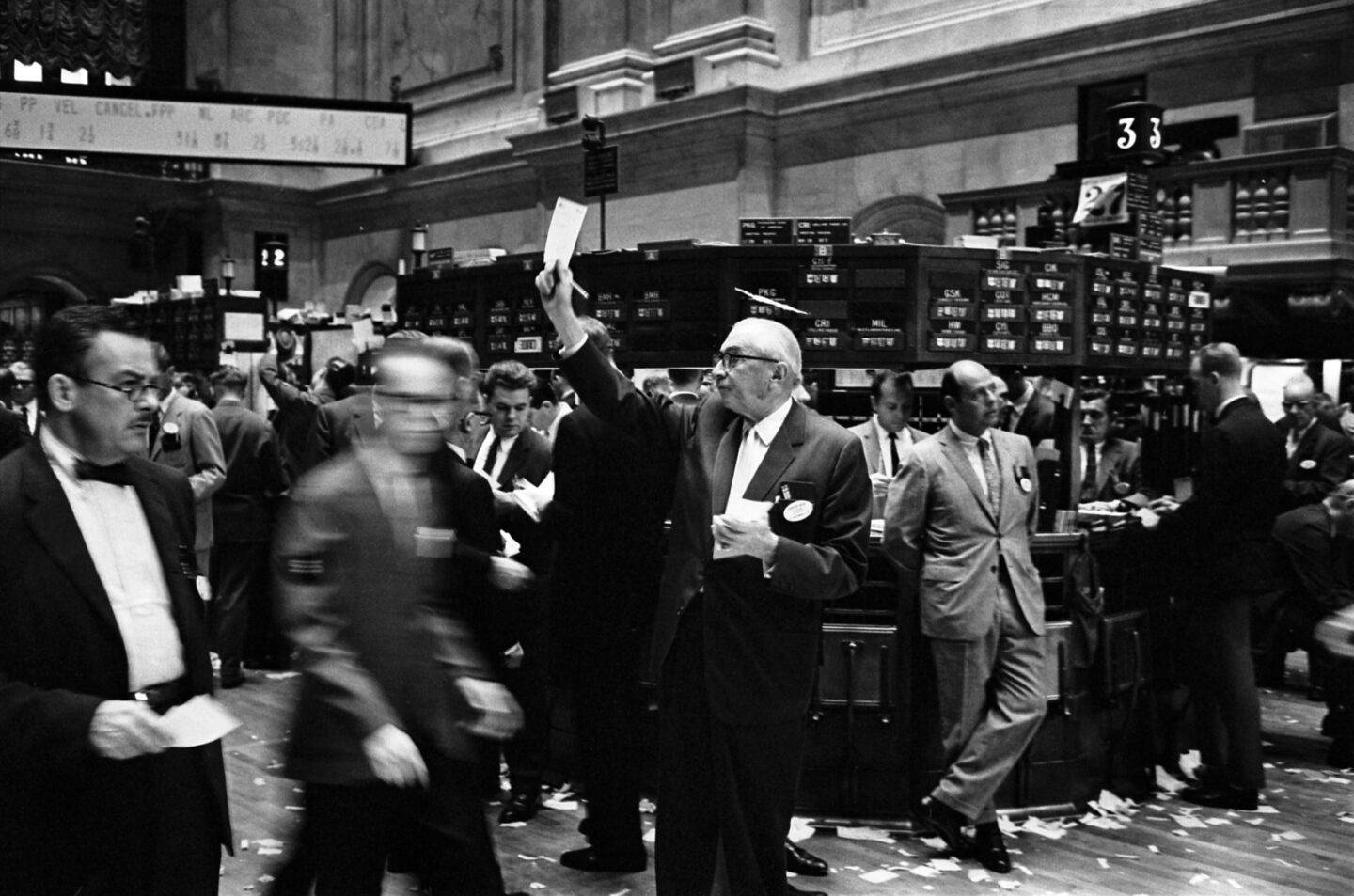

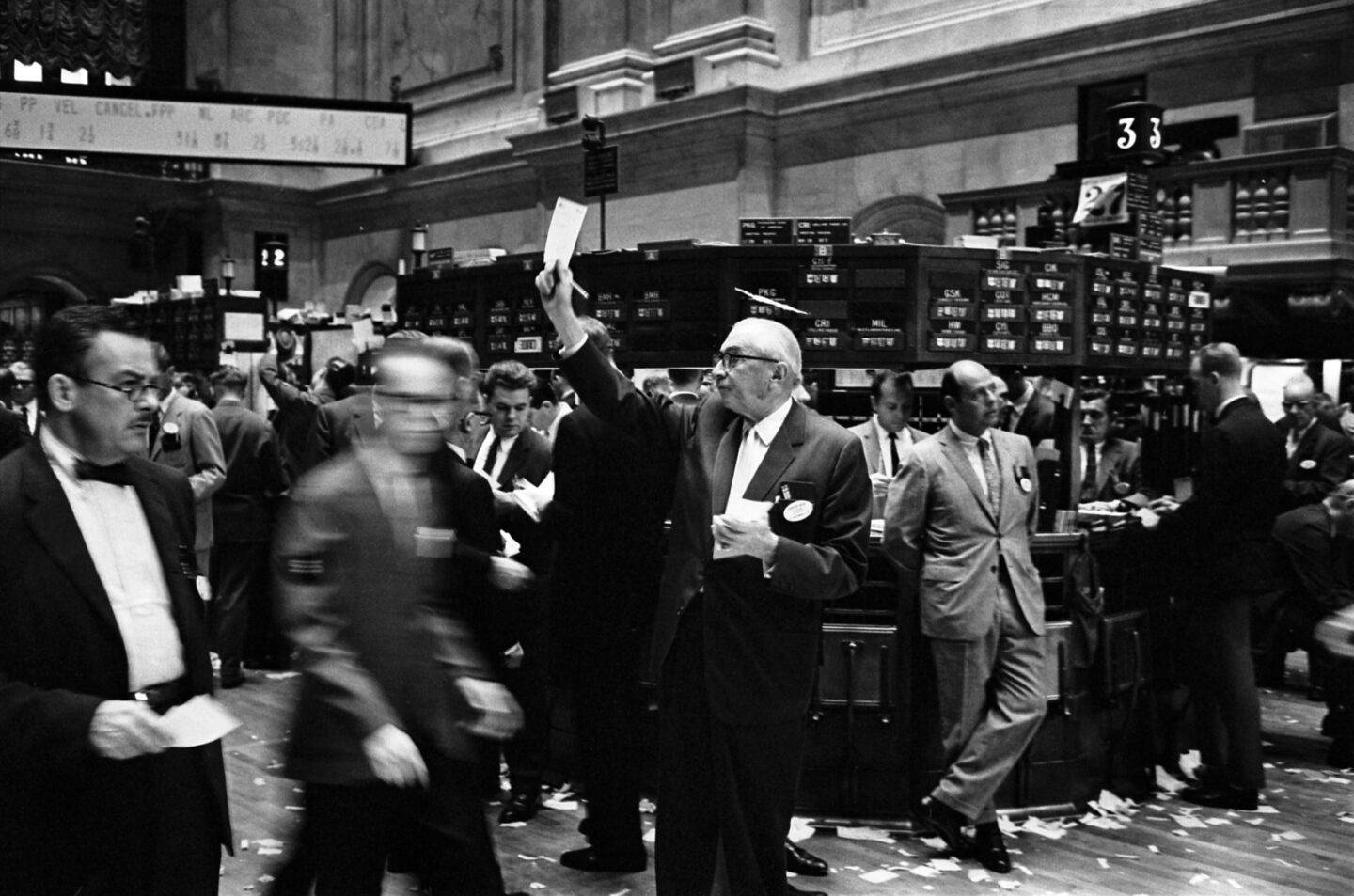

Reinforcement learning's objective, maximizing reward, is in line with the goals of a game. The same idea carries over to finance and investing, which share the goal of maximizing returns.

Copyright by analyticsindiamag.com

Financial use cases of reinforcement learning exist, but they are few. One of them is portfolio management. Markowitz models were used first, followed by Black-Litterman models; now, with advances in technology and new algorithms, reinforcement learning is finding its place in the financial arena.

Portfolio selection and allocation have largely been manual tasks. Reinforcement learning can automate them: the system proposes a portfolio chosen to maximize expected returns.

Reinforcement learning (RL) is a branch of machine learning in which an agent takes actions in an environment to maximize a cumulative reward. It is one of the major branches, alongside supervised learning and unsupervised learning. Its main components are the agent, state, policy, value function, environment and rewards (returns).

The agent is in a particular state and follows a policy to maximize its reward in the environment. Depending on whether its actions align with the objective, the agent is either rewarded or penalized. Reinforcement learning is most familiar from games, where the player is the agent and the simulation of the game serves as the environment. The goal of a game could be to score the maximum number of points or to reach a destination as early as possible.
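The agent, state, policy, environment and reward described above can be sketched as a short interaction loop. This is a toy illustration only: `ToyEnvironment`, its two actions and its reward values are hypothetical and do not come from the article.

```python
import numpy as np


class ToyEnvironment:
    """Hypothetical two-action environment: action 1 pays more on average."""

    def __init__(self, seed=0):
        self.rng = np.random.default_rng(seed)
        self.state = 0

    def step(self, action):
        # Reward is noisy; its mean depends on the chosen action.
        mean = 1.0 if action == 1 else 0.2
        reward = float(self.rng.normal(mean, 0.1))
        self.state = action  # the next state simply remembers the last action
        return self.state, reward


def run_episode(env, policy, steps=100):
    """Let the policy act for a fixed number of steps; return the cumulative reward."""
    total = 0.0
    state = env.state
    for _ in range(steps):
        action = policy(state)             # the policy maps a state to an action
        state, reward = env.step(action)   # the environment answers with state and reward
        total += reward
    return total


def always_one(state):
    return 1


def always_zero(state):
    return 0


good = run_episode(ToyEnvironment(seed=0), always_one)
bad = run_episode(ToyEnvironment(seed=0), always_zero)
print(good > bad)
```

A learning algorithm would adjust the policy between episodes so that the cumulative reward grows; the two fixed policies here only show how that quantity is measured.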

Maximizing reward, the objective of reinforcement learning, is in line with game goals. It can be applied in the same way to finance and investing, which share the goal of maximizing returns. Reinforcement learning has been applied to chess, Atari games, Go and many similar games on these same principles.

Deep Reinforcement Learning in Obtaining Maximum Return from Stocks

Deep reinforcement learning policies can be applied to portfolio selection. I ran an experiment to obtain a portfolio of stocks that gives maximum returns.

A set of stocks and their basic OHLC (open, high, low, close) data forms the dataset. The stocks can be drawn from any index that covers a good mix, such as the Sensex or Nifty. The portfolio should be built so that it combines low-beta stocks with high-beta stocks.
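Beta is commonly estimated as the covariance of a stock's returns with the index returns, divided by the variance of the index returns. The sketch below assumes daily closing prices and simple returns; the function names and the sample prices are illustrative, not taken from the article.

```python
import numpy as np


def simple_returns(close):
    """Period-over-period simple returns from a series of closing prices."""
    close = np.asarray(close, dtype=float)
    return close[1:] / close[:-1] - 1.0


def beta(stock_close, index_close):
    """Beta = cov(stock returns, index returns) / var(index returns)."""
    r_stock = simple_returns(stock_close)
    r_index = simple_returns(index_close)
    cov = np.cov(r_stock, r_index)  # 2x2 sample covariance matrix
    return cov[0, 1] / cov[1, 1]


# Made-up index closes, plus a stock that moves twice as much as the index.
index_close = np.array([100.0, 102.0, 101.0, 105.0, 104.0])
r_index = simple_returns(index_close)
stock_close = 50.0 * np.concatenate(([1.0], np.cumprod(1.0 + 2.0 * r_index)))

print(round(beta(stock_close, index_close), 6))
```

A stock whose returns are exactly twice the index returns has a beta of 2, and the index measured against itself has a beta of 1.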

Clustering is a good way to separate the low-beta stocks from the high-beta ones. Once the clusters are obtained, build different combinations of stocks drawn from them. After the stock selection is done, apply the deep policy network reinforcement learning algorithm to each of those combinations.
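One possible way to carry out these two steps is sketched below. It assumes a two-cluster k-means on the beta values alone and a fixed number of picks from each cluster; the article does not say which clustering method or combination sizes were used, and the tickers and betas are invented.

```python
import itertools

import numpy as np


def two_means_1d(values, iters=20):
    """Tiny 1-D k-means with k=2; label 0 is the low cluster, 1 the high cluster."""
    values = np.asarray(values, dtype=float)
    centers = np.array([values.min(), values.max()])
    for _ in range(iters):
        # Assign every value to its nearest center, then recompute the centers.
        labels = np.abs(values[:, None] - centers[None, :]).argmin(axis=1)
        for k in range(2):
            if np.any(labels == k):
                centers[k] = values[labels == k].mean()
    return labels


def candidate_portfolios(betas, n_low, n_high):
    """All portfolios made of n_low low-beta and n_high high-beta stocks."""
    tickers = list(betas)
    labels = two_means_1d([betas[t] for t in tickers])
    low = [t for t, label in zip(tickers, labels) if label == 0]
    high = [t for t, label in zip(tickers, labels) if label == 1]
    return [
        low_pick + high_pick
        for low_pick in itertools.combinations(low, n_low)
        for high_pick in itertools.combinations(high, n_high)
    ]


# Hypothetical tickers and beta values.
betas = {"A": 0.5, "B": 0.6, "C": 0.7, "D": 1.4, "E": 1.5}
portfolios = candidate_portfolios(betas, n_low=2, n_high=1)
print(len(portfolios), portfolios[0])
```

Only combinations are enumerated here, since the order of stocks inside a portfolio does not matter. The number of candidates grows quickly with the size of the universe, so in practice the cluster sizes or pick counts would need to stay small.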

Setting up parameters to implement Reinforcement Learning

Let us define the reinforcement learning environment first. The agent is set up with the usual conventions:

- The state will be the inputs and previous portfolio weights

- The action will consist of the investment weights

- The reward function will be based on the agent’s return – the baseline return and any other proportional returns
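The three definitions above can be written down as plain functions. This sketch reads the reward as the agent's return minus the return of a baseline, and it assumes an equal-weight baseline; both are interpretations rather than details given in the article, and the additional proportional term is left out because the article does not specify it.

```python
import numpy as np


def make_state(price_inputs, previous_weights):
    """State: the market inputs together with the previous portfolio weights."""
    return {
        "inputs": np.asarray(price_inputs, dtype=float),
        "previous_weights": np.asarray(previous_weights, dtype=float),
    }


def portfolio_return(weights, asset_returns):
    """One-period return of a portfolio holding the given weights."""
    return float(np.dot(weights, asset_returns))


def reward(action_weights, asset_returns):
    """Agent's return minus the return of an equal-weight baseline (assumed)."""
    n = len(asset_returns)
    baseline_weights = np.full(n, 1.0 / n)
    return portfolio_return(action_weights, asset_returns) - portfolio_return(
        baseline_weights, asset_returns
    )


asset_returns = np.array([0.02, -0.01])  # made-up one-period returns
action = np.array([1.0, 0.0])            # action: the investment weights
print(reward(action, asset_returns))
```

Under this reading the agent earns a positive reward only when its weights beat the baseline in that period, and holding the baseline weights itself earns zero.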

Once the parameters are set, the deep reinforcement learning architecture is implemented. It consists of neural network layers that map the input to portfolio weights aimed at maximizing returns. Four convolution layers can be used; the input to the architecture is the OHLC data for each stock over 50 time periods of history.
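The input described here can be assembled as a three-dimensional array. The layout (features, stocks, time) and the division by each stock's latest close are assumptions made for this sketch; the article only states that the input is OHLC data over 50 periods.

```python
import numpy as np

WINDOW = 50  # time periods of history, as stated in the text


def build_input(ohlc, t):
    """Return an array of shape (4, n_stocks, WINDOW) ending at period t.

    ohlc: array of shape (n_stocks, n_periods, 4) in open, high, low, close order.
    """
    ohlc = np.asarray(ohlc, dtype=float)
    if t + 1 < WINDOW:
        raise ValueError("not enough history before period t")
    window = ohlc[:, t + 1 - WINDOW : t + 1, :]  # (n_stocks, WINDOW, 4)
    # Assumed normalization: divide each stock's prices by its latest close
    # so that stocks trading at different price levels are comparable.
    last_close = window[:, -1:, 3:4]             # (n_stocks, 1, 1)
    normalized = window / last_close
    return np.transpose(normalized, (2, 0, 1))   # (4, n_stocks, WINDOW)


# Random prices for 3 stocks over 60 periods, only to check the shapes.
rng = np.random.default_rng(0)
prices = rng.uniform(1.0, 2.0, size=(3, 60, 4))
x = build_input(prices, t=59)
print(x.shape)
```

With this layout each of the four OHLC features acts as a channel, which matches the channel-first input that convolution layers expect in several frameworks.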

Various mathematical operations are performed within the neural network, and a cash bias is added to the last layer to balance it. The last layer uses a softmax activation function. The output at the end of each iteration is the current state and the instant reward. As the RL agent trains the model, the portfolio weights are displayed cumulatively at the end of each iteration. Running this policy over the different combinations yields the one portfolio with the maximum reward, which can then be used for investment with the aim of higher returns. […]
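The last layer can be illustrated in a few lines. The sketch assumes the network produces one score per stock and that the cash bias is a single extra value placed ahead of those scores before the softmax; the function name and the sample scores are hypothetical.

```python
import numpy as np


def weights_with_cash(scores, cash_bias=0.0):
    """Softmax over [cash_bias, score_1, ..., score_n].

    Returns n + 1 non-negative weights that sum to 1; index 0 is cash.
    """
    logits = np.concatenate(([cash_bias], np.asarray(scores, dtype=float)))
    logits = logits - logits.max()  # shift for numerical stability
    exp = np.exp(logits)
    return exp / exp.sum()


scores = np.array([0.3, -0.2, 1.1])  # made-up per-stock scores
w = weights_with_cash(scores, cash_bias=0.0)
print(w, w.sum())
```

Because the softmax output is non-negative and sums to one, it can be read directly as a long-only allocation across cash and the stocks.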

Read more – analyticsindiamag.com
