Thanks to deep learning, the tricky business of making brain atlases just got a lot easier.

copyright by singularityhub.com

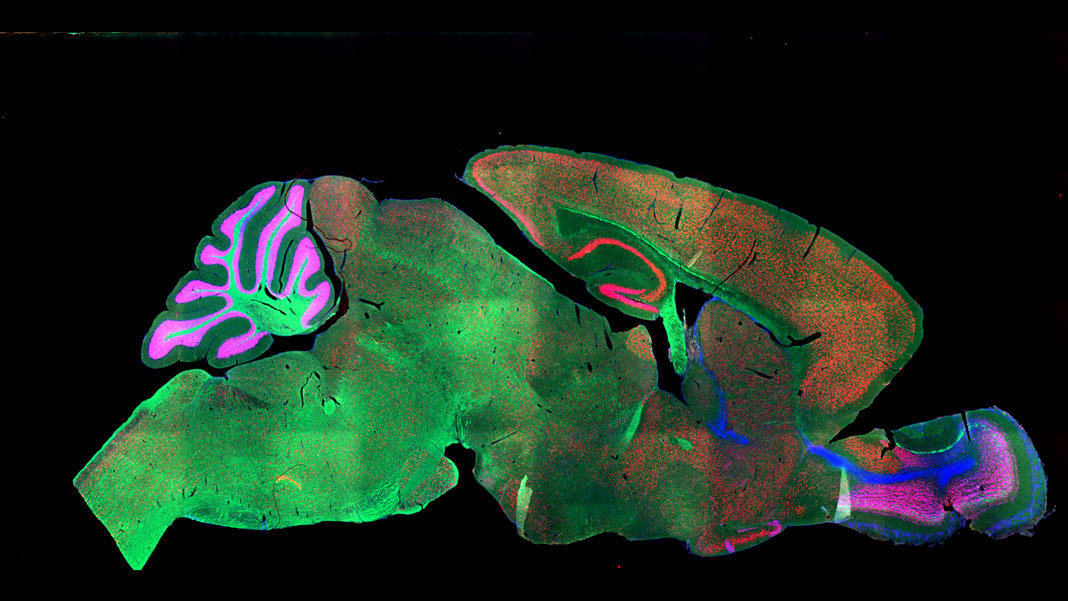

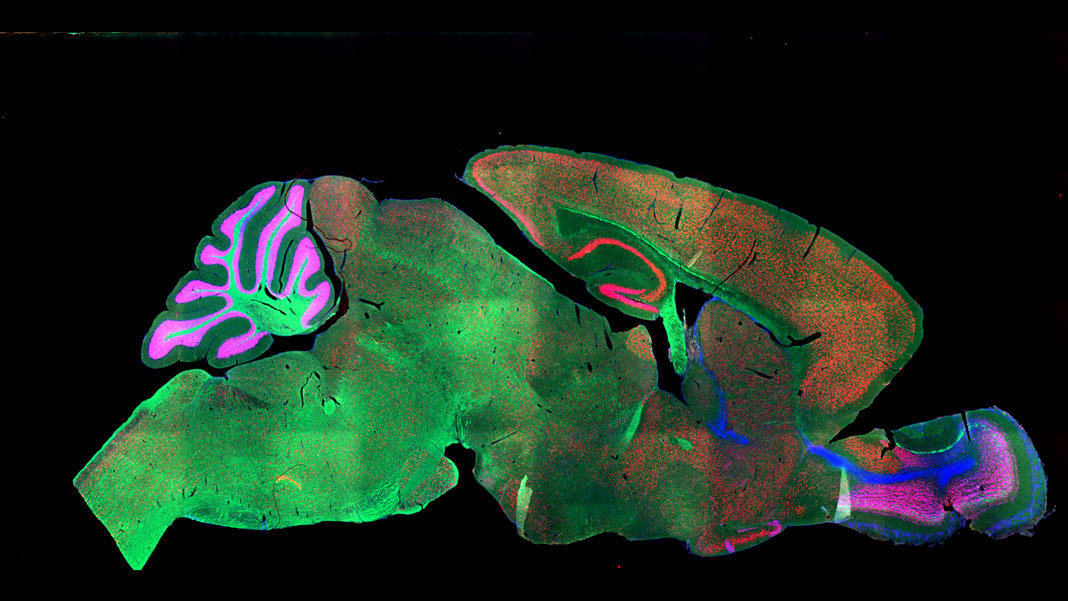

Brain maps are all the rage these days. From rainbow-colored dots that highlight neurons or gene expression across the brain, to neon “brush strokes” that represent neural connections, every few months seems to welcome a new brain map.

Without doubt, these maps are invaluable for connecting the macro (the brain’s architecture) to the micro (genetic profiles, protein expression, neural networks) across space and time. Scientists can now compare brain images from their own experiments to a standard resource. This is a critical first step in, for example, developing algorithms that can spot brain tumors, or understanding how depression changes brain connectivity. We’re literally in a new age of neuro-exploration.

But dotting neurons and drawing circuits is just the start. To be truly useful, brain atlases need to be fully annotated. Just as early cartographers labeled the Earth’s continents, a first step in annotating brain maps is to precisely parse out different functional regions.

Unfortunately, microscopic neuroimages look nothing like brain anatomy coloring books. Rather, they come in a wide variety of sizes, rotations, and colors. The imaged brain sections, due to extensive chemical pre-treatment, are often distorted or missing pieces. To ensure labeling accuracy, scientists often have to go in and hand-annotate every single image. Similar to the pain of manually labeling data for machine learning, this step creates a time-consuming, labor-intensive bottleneck in neuro-cartography endeavors.

No more. This month, a team from the Brain Research Institute at the University of Zurich (UZH) tapped the processing power of artificial brains to take over the much-hated job of “region segmentation.” The team fed a deep neural net microscope images of whole mouse brains, which were “stained” with a variety of methods and a large pool of different markers.

Regardless of age, method, or marker, the algorithm reliably identified dozens of regions across the brain, often matching the performance of human annotation. The bot also showed a remarkable ability to “transfer” its learning: trained on one marker, it could generalize to other markers or staining methods. When tested on a pool of human brain scans, the algorithm performed just as well.
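What does “matching the performance of human annotation” look like in practice? This excerpt doesn't say which metric the team used, so take the following as a minimal sketch under one assumption: that agreement is scored with the Dice overlap, a common choice for segmentation. The function name `dice_score` and the tiny 3×3 label grids are hypothetical stand-ins for illustration, not data from the study.

```python
from typing import Sequence

# A mask is a 2D grid of integer region labels, one label per pixel.
Mask = Sequence[Sequence[int]]


def dice_score(predicted: Mask, annotated: Mask, region: int) -> float:
    """Dice overlap for one region label: 2 * |A and B| / (|A| + |B|)."""
    pred_pixels = 0    # pixels the network assigned to this region
    human_pixels = 0   # pixels the human annotator assigned to it
    shared_pixels = 0  # pixels both agree on
    for pred_row, human_row in zip(predicted, annotated):
        for p, h in zip(pred_row, human_row):
            in_pred = p == region
            in_human = h == region
            pred_pixels += in_pred
            human_pixels += in_human
            shared_pixels += in_pred and in_human
    total = pred_pixels + human_pixels
    if total == 0:
        # Neither mask contains the region; treat that as full agreement.
        return 1.0
    return 2 * shared_pixels / total


# Hypothetical toy example: 0 = background, 1 and 2 = two brain regions.
human = [
    [1, 1, 0],
    [1, 2, 2],
    [0, 2, 2],
]
network = [
    [1, 1, 0],
    [1, 1, 2],  # the network mislabels one pixel of region 2 as region 1
    [0, 2, 2],
]

for label in (0, 1, 2):
    print(label, round(dice_score(network, human, label), 3))
```

A score of 1.0 means the network's outline and the human's outline coincide pixel for pixel; lower scores mean the two disagree about where a region begins and ends.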

“Our…method can accelerate brain-wide exploration of region-specific changes in brain development and, by easily segmenting brain regions of interest for high-throughput brain-wide analysis, offer an alternative to existing complex … techniques,” the authors said.

Um…So What?

To answer that question, we need to travel back to 2010, when the Allen Brain Institute released the first human brain map. A masterpiece 10 years in the making, the map “merged” images of six human brains into a single, annotated atlas that combined the brain’s architecture with dots representing each of the 10,000 genes across the brain.[…]

