Machine learning can be used for a multitude of tasks in the payments industry; fraud detection and customer retention are great examples. I recently had the opportunity to talk with George Pliev, the CEO of Neurodata Lab, about how he and his team are using machine learning to interpret people's emotional states.

copyright by www.paymentsjournal.com

Does the machine recognize mood from voice, facial features, or text?

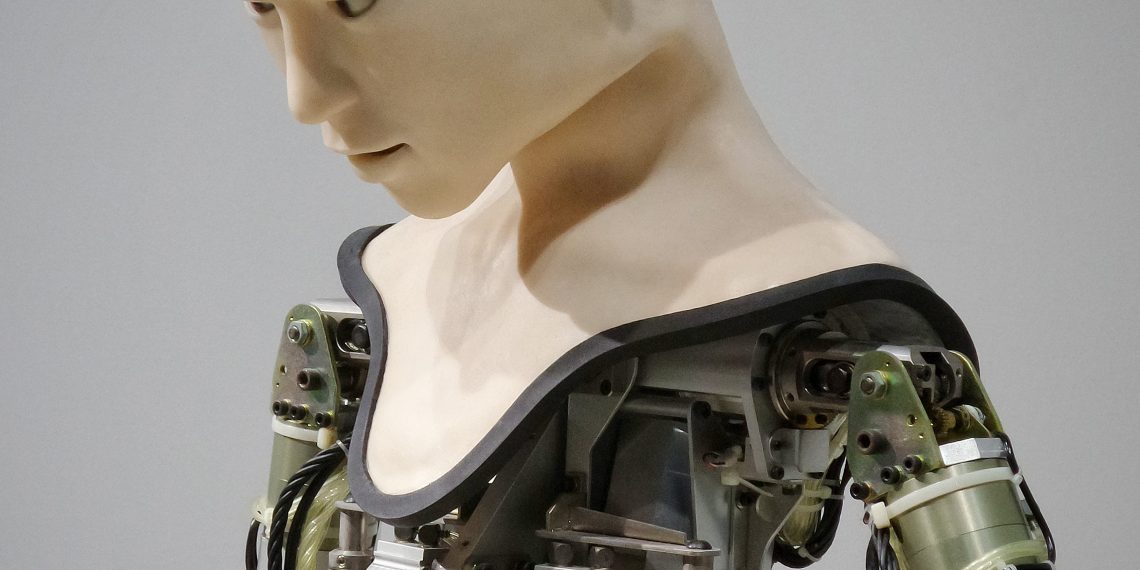

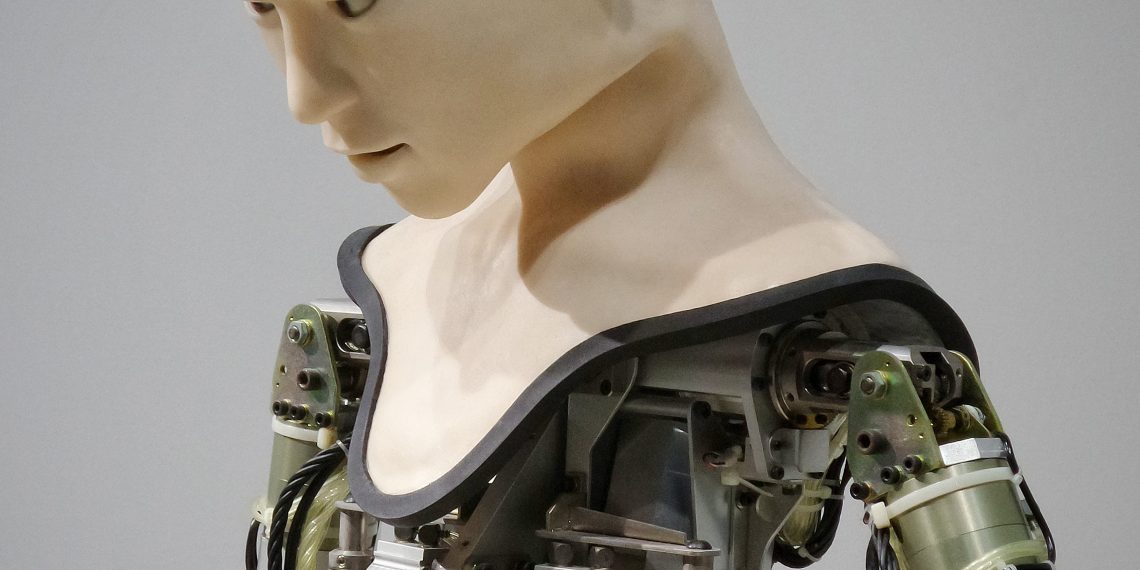

We use a multimodal approach to emotion recognition. We developed a cloud-based technology that can recognize emotions by analyzing human data from several channels, including voice, facial expressions, body movements, and psychophysiological parameters. Thus we work […]

To train a machine learning algorithm, for instance a neural network, to recognize emotions, you need a dataset: photos, videos, or audio recordings in which people express different emotions. These can be talk shows or dedicated research datasets such as our RAMAS and Emotion Miner Data Corpus. We train the algorithms on data that has previously been analysed, or ‘marked up’, by a large number of people. Based on the emotion that most people saw in a particular video fragment, the algorithm is trained to detect that emotion in new videos it has not seen before. Neural networks thus learn to associate a particular set of emotion cues coming from different channels (face, voice, body) with a particular set of emotions and even cognitive states.
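The labeling step described above, where each fragment takes the emotion most annotators saw, can be illustrated with a minimal Python sketch. This is only an illustration of majority voting under assumed inputs, not Neurodata Lab's actual pipeline; the clip ids, the labels, and the `majority_label` helper are all hypothetical.

```python
from collections import Counter


def majority_label(annotations):
    """Return the most frequent label and the share of annotators who chose it."""
    counts = Counter(annotations)
    label, votes = counts.most_common(1)[0]
    return label, votes / len(annotations)


# Hypothetical annotations: fragment id -> labels given by individual annotators.
raw = {
    "clip_001": ["happiness", "happiness", "surprise", "happiness"],
    "clip_002": ["anger", "sadness", "anger", "anger", "anger"],
}

# One training label per fragment, plus how strongly the annotators agreed.
training_labels = {clip: majority_label(votes) for clip, votes in raw.items()}

for clip, (label, agreement) in training_labels.items():
    print(f"{clip}: {label} (agreement {agreement:.0%})")
```

Keeping the agreement share alongside the label makes it possible to down-weight or drop fragments on which annotators disagreed.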

How accurate is it?

When analyzing audiovisual content, the algorithm assigns a probability to each emotional expression. For instance, it can predict that a person's expression is 96% happiness, 3.6% surprise, and 0.4% a mix of other emotions. The more data the system analyzes, the better its predictions get.
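A percentage breakdown like this is typically what a classifier produces when its raw per-emotion scores are normalized into a probability distribution. The sketch below uses the standard softmax function for that; whether Neurodata Lab's models use softmax is an assumption, and the raw scores are invented for illustration.

```python
import math


def softmax(scores):
    """Turn raw per-emotion scores into probabilities that sum to 1."""
    highest = max(scores.values())  # subtracted for numerical stability
    exps = {label: math.exp(s - highest) for label, s in scores.items()}
    total = sum(exps.values())
    return {label: e / total for label, e in exps.items()}


# Hypothetical raw model outputs for one video fragment.
raw_scores = {"happiness": 4.0, "surprise": 0.7, "other": -1.5}

probs = softmax(raw_scores)
for label, p in sorted(probs.items(), key=lambda item: -item[1]):
    print(f"{label}: {p:.1%}")
```

With these made-up scores, happiness takes roughly 96% of the probability mass, and the remainder is split between the other classes.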

In some cases the algorithm can give false predictions, but the potential number of those is tiny. At the same time, multimodal emotion recognition systems are more accurate than unimodal ones, because affective data coming from the face can be confirmed by data received from the voice.
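One common way to let one channel confirm another is late fusion: each modality produces its own probability distribution, and the distributions are combined by a weighted average. The sketch below assumes this scheme purely for illustration; the interview does not say how Neurodata Lab combines channels, and the `fuse` helper and all the numbers are hypothetical.

```python
def fuse(per_modality, weights=None):
    """Weighted average of per-modality probability distributions (late fusion)."""
    modalities = list(per_modality)
    if weights is None:
        weights = {m: 1.0 for m in modalities}  # equal trust in every channel
    total_weight = sum(weights[m] for m in modalities)
    labels = per_modality[modalities[0]].keys()
    return {
        label: sum(weights[m] * per_modality[m][label] for m in modalities) / total_weight
        for label in labels
    }


# Hypothetical predictions from two separate unimodal models.
predictions = {
    "face": {"happiness": 0.70, "surprise": 0.25, "other": 0.05},
    "voice": {"happiness": 0.90, "surprise": 0.05, "other": 0.05},
}

fused = fuse(predictions)
top = max(fused, key=fused.get)
print(top, {label: round(p, 3) for label, p in fused.items()})
```

When both channels point to the same emotion, the fused estimate stays confident; when they disagree, the averaged distribution flattens, which signals a less reliable prediction.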

Who is using this type of tech?


We collaborate with both big corporations (Rosbank, Société Générale Group, Microsoft, Samsung) and start-ups (Promobot, a robotics company with whom we are going to CES). They represent industries where emotional analysis is of great interest and can be used for a number of solutions:

Natural interaction for human robotics, virtual assistants, chatbots

Predictive analytics for HR/recruitment

Customer Experience Management

In-cabin analytics of driver’s state and solutions for self-driving cars

At some point companies arrived at the idea that a purchase decision is massively influenced not only by what consumers think about the product, but also by what they actually feel about it. Objective emotional analytics can be an invaluable tool. Neuromarketing instruments used with tiny focus groups were able to provide some cues about how customers feel about products. Emotion analytics opens a new era in which companies can recognize the emotions of each customer, right at the time of purchase.[…]

