For most organisations, the process of cognification is the single biggest challenge of the next five years. Everything will get smarter – in theory. The limitations of existing computer chips, however, are slowing down the process. Put simply, today’s technology isn’t up to the job.

copyright by www.wired.co.uk

“What we heard universally was that current hardware was holding developers back,” says Nigel Toon, co-founder of Graphcore, the Bristol-based startup behind a new chip to help speed up the process-hogging, resource-intensive deployment of AI.

By using cloud computing and vast datasets, some neural networks function sufficiently well. The more powerful AI systems in development, however, struggle to process complex rapid-fire calculations at speed when they run on central processing units (CPUs), which work sequentially. Latency, in other words, becomes the bottleneck.

“For 70 years we have programmed computers to work on instructions step-by-step,” says Toon, 54. AI, however, involves computers learning and adapting from the data they process. Speech is simple enough to understand and can be handled by existing technology. Understanding entire languages and the context in which words are said is more difficult, requiring systems to store data as they go and to delve deep into their memory to understand the background to conversations. “The compute required to learn from data is very different to the traditional process. It’s a completely different type of workload,” says Toon.

Stopgap solutions – including putting the CPU in the cloud to share the workload and using graphics processing units (GPUs) – aren’t fast enough for the rapidly advancing world of AI. Google, Amazon and Apple are already working on hardware to solve this, prompting a flood of venture capital into previously unfashionable chip startups.

Toon’s prior experience – he and co-founder Simon Knowles launched semiconductor company Icera in 2002, later selling it to chip-maker Nvidia for $435 million (£315 million) in 2011 – inspired him to think about the hardware limits artificial intelligence is butting up against.

In 2016, Toon and Knowles met researchers to learn about their frustrations and future plans. The pair decided to work from first principles, thinking less about code and more about the computer itself. Their solution required building an entirely new type of processor – and thinking about computer workloads in a different way.

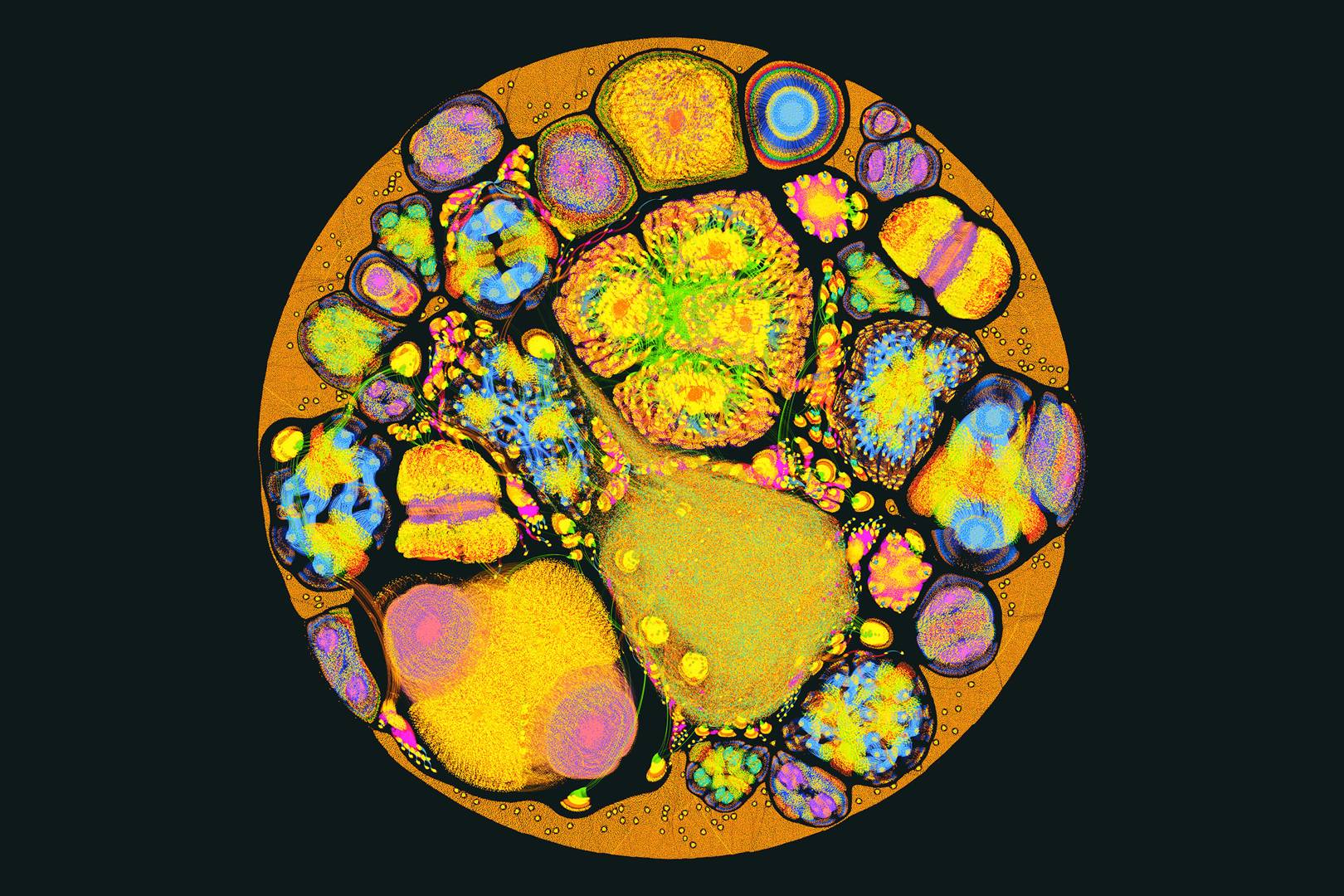

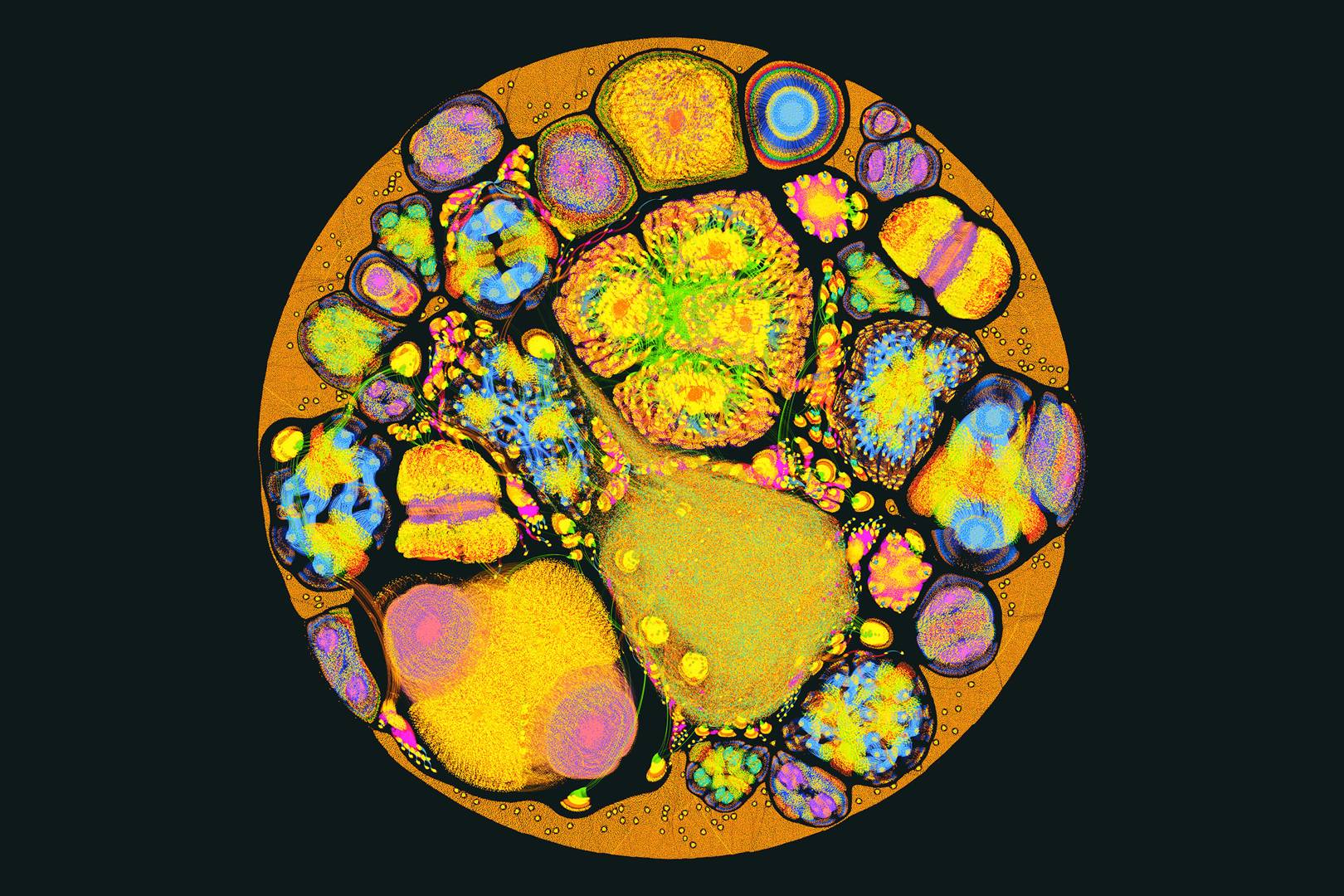

Ordinarily, CPUs solve problems by collecting blocks of data, then running algorithms or logic operations on that information in sequence. Modern quad-core chips have four parallel processors. GPUs, designed for gaming, have parallel processors that can perform multiple tasks at the same time. With AI systems, computers need to pull huge amounts of data in parallel from various locations, then process it quickly. This process is known as graph computing, which focuses on nodes and networks rather than if-then instructions. […]
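To make the contrast between step-by-step instructions and "nodes and networks" concrete, here is a minimal sketch in Python. It is only an illustration of the general idea of a computation graph, not Graphcore's design: the graph, the node names (`x1`, `w1`, `m1`, `total` and so on) and the `evaluate` helper are all hypothetical. Each node lists the nodes it depends on, and the graph is evaluated in levels; nodes in the same level do not depend on one another, so a parallel processor could in principle compute them at the same time.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical toy graph: each node maps to the nodes it depends on.
GRAPH = {
    "x1": [], "w1": [], "x2": [], "w2": [],
    "m1": ["x1", "w1"],      # m1 = x1 * w1
    "m2": ["x2", "w2"],      # m2 = x2 * w2
    "total": ["m1", "m2"],   # total = m1 + m2
}

# Operation attached to each non-input node (illustrative only).
OPS = {
    "m1": lambda a, b: a * b,
    "m2": lambda a, b: a * b,
    "total": lambda a, b: a + b,
}


def evaluate(graph, ops, inputs):
    """Evaluate the graph level by level.

    Returns the computed values and the list of levels. Nodes within one
    level are independent of each other; this sketch still computes them
    one after another, but nothing forces that order.
    """
    sorter = TopologicalSorter(graph)
    sorter.prepare()
    values = dict(inputs)
    levels = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # nodes whose inputs are all available
        levels.append(ready)
        for node in ready:
            if node not in values:  # input nodes already have values
                args = [values[dep] for dep in graph[node]]
                values[node] = ops[node](*args)
            sorter.done(node)
    return values, levels


values, levels = evaluate(GRAPH, OPS, {"x1": 1.0, "w1": 2.0, "x2": 3.0, "w2": 4.0})
print(levels)
print(values["total"])
```

In this toy case the two multiplications land in the same level, which is the kind of independent work the article says AI hardware needs to process in parallel rather than in sequence.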
