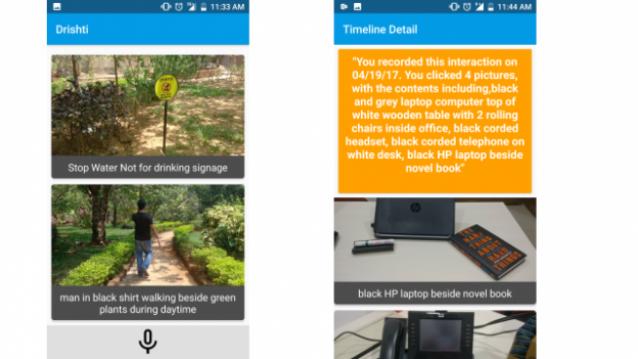

The Drishti app attempts to help the visually impaired make sense of their surroundings.

copyright by tech.firstpost.com

Persistent Systems is a technology services company headquartered in Pune. Every year it hosts a global 24-hour hackathon called ‘Semicolons’, in which self-managed teams ideate and compete against each other to come up with innovative solutions to everyday problems. This year’s theme was Digital Transformation, and the event drew 45 teams from 11 global centres in 5 countries, involving more than 600 Persistent employees.

Drishti: a real winner

This year we realised we wanted to focus on deep learning and machine learning, which are trending technologies, and to use them for something in the social sector. After discussing with the team, we decided to build something for the visually impaired. One of the major applications of deep learning is computer vision. It is not limited to identifying what is in a scene; it can also classify the scenario and what is happening in it by looking at the way things are placed. We spent three weeks studying deep learning and learning how to use it, and then we set about building the application, which aims to make the world more accessible to a visually impaired person by doing certain very specific tasks. The first step was to identify and classify objects and persons and describe the scene with directional guidance.

Artificial Eyes help identify the surroundings

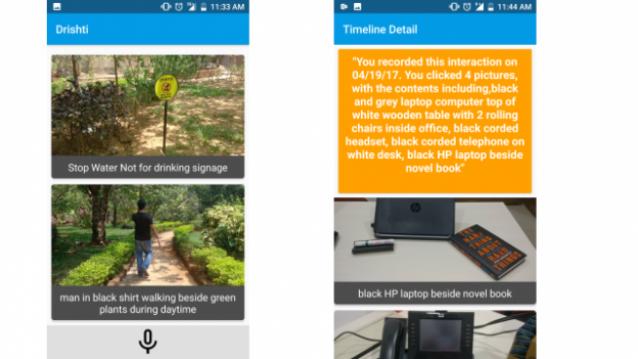

In our app, the first level of interpretation is near-instantaneous: within a couple of seconds of taking a photo, you get a description of your surroundings. This is not a full-blown application, just what we could do in 24 hours. So we focused on one user and connected to his Google+ Photos and Facebook accounts to get some contextual information, which our machine learning algorithm then learnt from. After that, when a friend came in front of the visually impaired subject, the app could recognise that person.

